I’ve been staring at the DAGKnight whitepaper for so long that the mathematical notation is starting to burn into my retina. There is a massive, often painful gap between “mathematically sound” and “runs on a CPU without melting it.” We’re sitting here on New Year’s Eve, and while everyone else is popping champagne, I’m thinking about graph connectivity and topological sorting.

Here’s the situation. We finally have a paper-faithful implementation of the DAGKnight protocol. It exists. It compiles. It passes the logic tests. But getting it to actually perform in a live environment? That’s a whole different beast.

The “Paper-Faithful” Trap

Academic papers are great. They assume you have infinite time and spherical cows in a vacuum. When you implement a consensus protocol like DAGKnight strictly according to the definition, you end up with something that is logically correct but computationally heavy. Extremely heavy.

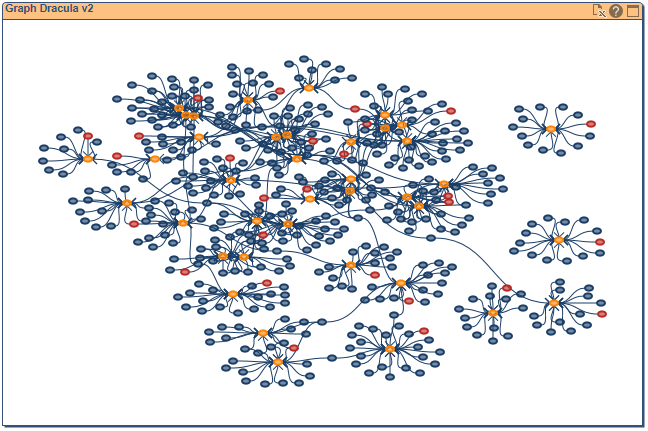

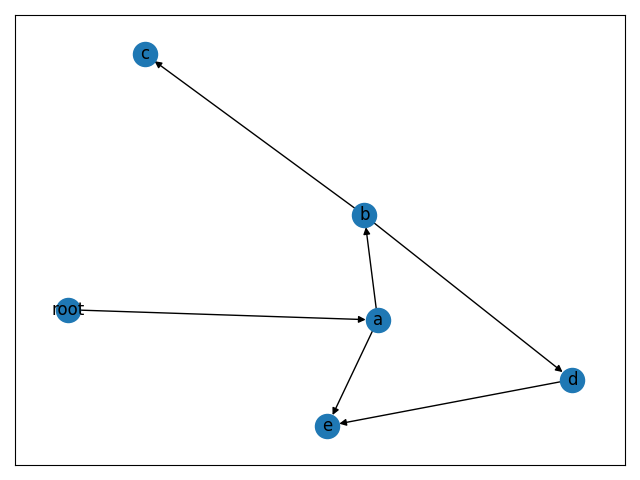

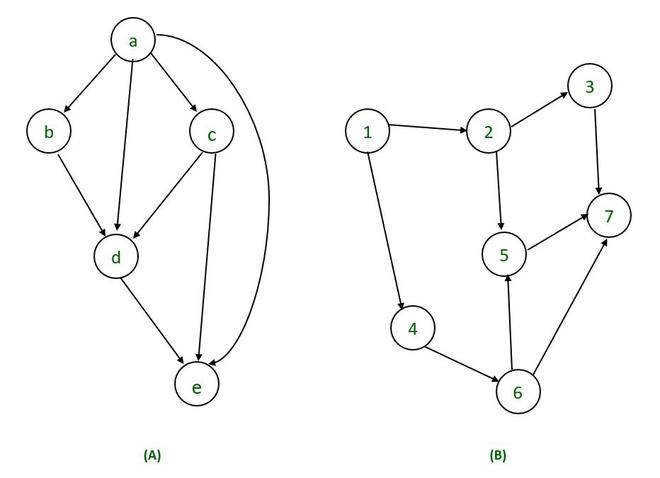

I ran some initial benchmarks on the rank calculation logic. For small graphs, it’s fine. But throw 10,000 blocks at it? The latency spikes are nasty. The protocol relies on complex traversals to determine the ordering of blocks in the DAG (Directed Acyclic Graph), and doing this naively for every new block is just asking for trouble.

It’s not just about “optimizing code.” It’s about algorithmic complexity. We need to handle high throughput—I’m talking 5 blocks per second (BPS) or more. If the node spends 200ms just calculating the rank of a new block, we’re dead in the water before we even start.
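To put numbers on that: at 5 BPS a new block shows up every 200 ms on average, so a 200 ms rank calculation eats the whole per-block budget on a single thread before any other validation runs. Here is a back-of-the-envelope sketch of that arithmetic. The function names are mine for illustration, not something from the codebase.

```rust
/// Average time between blocks, in milliseconds, at a given block rate.
fn block_interval_ms(blocks_per_second: f64) -> f64 {
    1000.0 / blocks_per_second
}

/// Fraction of the per-block time budget consumed by one processing stage.
/// A value of 1.0 or more means the stage alone cannot keep up.
fn budget_used(stage_ms: f64, blocks_per_second: f64) -> f64 {
    stage_ms / block_interval_ms(blocks_per_second)
}
```

Calling `budget_used(200.0, 5.0)` returns `1.0`, meaning 100% of the budget is gone on rank calculation alone. At 10 BPS the same 200 ms stage would need twice the time available.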

The Rank Calculation Bottleneck

The specific pain point right now is the rank calculation on large datasets. In GhostDAG (the predecessor), things were heavy but manageable. DAGKnight introduces more rigorous ordering constraints to solve some edge cases in security, but that comes at a cost.

Here is a simplified view of what the naive implementation looks like. It walks the graph way too often:

```rust
fn calculate_rank_naive(block: &Block, dag: &Dag) -> u64 {
    // This is the "paper faithful" version.
    // It hurts to look at because it's O(pain).
    let mut max_parent_rank = 0;

    // We are re-traversing deep history here unnecessarily.
    for parent in &block.parents {
        let parent_rank = dag.get_rank(parent);
        if parent_rank > max_parent_rank {
            max_parent_rank = parent_rank;
        }

        // The DAGKnight-specific condition checks are heavy.
        if !check_k_cluster_condition(parent, dag) {
            // expensive recursive check
        }
    }

    max_parent_rank + 1
}
```

See that loop? In a high-BPS network, a block might have dozens of parents, and checking cluster conditions recursively for each one destroys performance. I’ve noticed that once the graph grows past about 10,000 blocks, this slows to a crawl.

We haven’t even started the real optimization work yet. The plan for January is to rip this apart. We need memoization, better caching of the anticone (the set of blocks that are neither ancestors nor descendants), and probably a completely different data structure for storing the graph in memory.
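To make the memoization part concrete, here is a minimal sketch of what I have in mind. It is not the real implementation: the `Dag` layout, the `u64` block ids, and the `computed` counter are all assumptions for illustration, and it only covers the "1 + max parent rank" part of the naive version, not the cluster checks. It uses an explicit stack instead of recursion so deep history cannot blow the call stack.

```rust
use std::collections::HashMap;

type BlockId = u64;

/// Hypothetical in-memory DAG: block id -> parent ids, plus a rank cache.
struct Dag {
    parents: HashMap<BlockId, Vec<BlockId>>,
    rank_cache: HashMap<BlockId, u64>,
    /// How many ranks were actually computed (only here to show the caching).
    computed: usize,
}

impl Dag {
    fn new() -> Self {
        Dag { parents: HashMap::new(), rank_cache: HashMap::new(), computed: 0 }
    }

    fn insert(&mut self, id: BlockId, parents: Vec<BlockId>) {
        self.parents.insert(id, parents);
    }

    /// Rank = 1 + max rank over parents; a block with no known parents gets 1.
    /// Each block's rank is computed at most once and then served from cache.
    fn rank(&mut self, id: BlockId) -> u64 {
        let mut stack = vec![id];
        while let Some(&current) = stack.last() {
            if self.rank_cache.contains_key(&current) {
                stack.pop();
                continue;
            }
            let parents = self.parents.get(&current).cloned().unwrap_or_default();
            // Parents whose rank is not cached yet must be resolved first.
            let pending: Vec<BlockId> = parents
                .iter()
                .copied()
                .filter(|p| !self.rank_cache.contains_key(p))
                .collect();
            if pending.is_empty() {
                let max_parent = parents.iter().map(|p| self.rank_cache[p]).max().unwrap_or(0);
                self.rank_cache.insert(current, max_parent + 1);
                self.computed += 1;
                stack.pop();
            } else {
                stack.extend(pending);
            }
        }
        self.rank_cache[&id]
    }
}
```

With this shape, adding one block on top of an already-ranked DAG costs work proportional to its parent count rather than to the depth of history. The catch is that a plain cache like this assumes ranks never change once computed, which may not hold for everything DAGKnight needs.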

The Hard Fork Nightmare

Then there’s the activation. Writing the protocol is one thing; switching a running network from GhostDAG to DAGKnight is another. It’s like changing the engine of a car while doing 100 mph on the highway.

The hard fork activation code isn’t done. It’s untested. The theoretical boundary where the network agrees to switch rulesets is terrifying. If one node lags behind or calculates the “blue score” (the number of blue blocks in a block’s past) slightly differently during the transition, you get a chain split. A bad one.

I’m looking at implementing a dual-verification window where nodes validate blocks against both protocols for a set period. It’s paranoid, but I prefer paranoia to a stalled network.
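Here is a rough sketch of one way that window could work. Everything in it is an assumption for illustration: the `ForkConfig` fields, the `Ruleset` enum, and the stub verifiers (which just read a flag off a fake `Block`) are not real code. Compared with a single-threshold switch, it adds a middle band of DAA scores where a block has to pass both verifiers.

```rust
#[derive(Debug, PartialEq)]
enum ValidationError {
    GhostDagRejected,
    DagKnightRejected,
}

#[derive(Debug, PartialEq, Clone, Copy)]
enum Ruleset {
    GhostDagOnly,
    Both,
    DagKnightOnly,
}

/// Hypothetical fork parameters, expressed in DAA score.
struct ForkConfig {
    dual_start: u64, // first score validated under both rulesets
    dual_end: u64,   // first score validated under DAGKnight alone
}

/// Stand-in block: the two flags fake the outcome of each verifier.
struct Block {
    ghostdag_ok: bool,
    dagknight_ok: bool,
}

fn verify_ghostdag(block: &Block) -> Result<(), ValidationError> {
    if block.ghostdag_ok { Ok(()) } else { Err(ValidationError::GhostDagRejected) }
}

fn verify_dagknight(block: &Block) -> Result<(), ValidationError> {
    if block.dagknight_ok { Ok(()) } else { Err(ValidationError::DagKnightRejected) }
}

/// Pick the active ruleset purely from the DAA score, so every node that
/// agrees on the score agrees on the rules.
fn ruleset_for(daa_score: u64, cfg: &ForkConfig) -> Ruleset {
    if daa_score < cfg.dual_start {
        Ruleset::GhostDagOnly
    } else if daa_score < cfg.dual_end {
        Ruleset::Both
    } else {
        Ruleset::DagKnightOnly
    }
}

fn validate_block(block: &Block, daa_score: u64, cfg: &ForkConfig) -> Result<(), ValidationError> {
    match ruleset_for(daa_score, cfg) {
        Ruleset::GhostDagOnly => verify_ghostdag(block),
        Ruleset::Both => {
            // Inside the window a block must satisfy both rulesets.
            verify_ghostdag(block)?;
            verify_dagknight(block)
        }
        Ruleset::DagKnightOnly => verify_dagknight(block),
    }
}
```

The error variant tells you which ruleset rejected the block, which is exactly the signal I’d want in logs during the transition. Whether the window should sit before, after, or across the activation point is still an open question.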

```rust
// Potential activation logic structure
fn validate_block(block: &Block, current_daa_score: u64) -> Result<(), ValidationError> {
    let fork_activation_score = 50_000_000; // Arbitrary future point

    if current_daa_score < fork_activation_score {
        verify_ghostdag(block)
    } else {
        // The danger zone
        // If DAGKnight state isn't perfectly prepped, we panic here
        verify_dagknight(block)
    }
}
```

Stress Testing: The 5 BPS Goal

Why do we do this to ourselves? Because speed matters. We want to push the testnet to 5 BPS next month. That sounds low if you’re used to centralized database numbers, but for a decentralized, proof-of-work DAG? That’s blazing fast. It means dealing with large numbers of parallel blocks, the ones a linear chain would simply orphan, and ordering them in real time.

I’m honestly curious to see what breaks first. Will it be the network layer flooding with block headers? Or will it be the CPU choking on the topological sort? My money is on the CPU. The computations required to maintain the DAG structure at that velocity are non-trivial.
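For a sense of the baseline cost, here is plain Kahn’s algorithm over a parent map. To be clear, this is a textbook topological sort, not DAGKnight’s ordering rule, which is considerably more involved. The `u64` ids and the smallest-id tie-break are assumptions I picked to keep the output deterministic.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Kahn's algorithm over a parent map (block id -> parent ids).
/// Returns an order with every parent before its children, breaking ties by
/// smallest id, or None if there is a cycle or a reference to an unknown parent.
fn topological_order(parents: &BTreeMap<u64, Vec<u64>>) -> Option<Vec<u64>> {
    // Number of not-yet-emitted parents per block.
    let mut missing: BTreeMap<u64, usize> = BTreeMap::new();
    // Reverse edges: parent id -> child ids.
    let mut children: BTreeMap<u64, Vec<u64>> = BTreeMap::new();

    for (&id, ps) in parents {
        missing.insert(id, ps.len());
        for &p in ps {
            if !parents.contains_key(&p) {
                return None;
            }
            children.entry(p).or_default().push(id);
        }
    }

    // Blocks with no parents are ready immediately.
    let mut ready: BTreeSet<u64> = missing
        .iter()
        .filter(|(_, n)| **n == 0)
        .map(|(&id, _)| id)
        .collect();

    let mut order = Vec::with_capacity(parents.len());
    while let Some(id) = ready.pop_first() {
        order.push(id);
        if let Some(kids) = children.get(&id) {
            for &child in kids {
                if let Some(n) = missing.get_mut(&child) {
                    *n -= 1;
                    if *n == 0 {
                        ready.insert(child);
                    }
                }
            }
        }
    }

    // Anything left unemitted sits on a cycle.
    if order.len() == parents.len() { Some(order) } else { None }
}
```

A full pass touches every block and every parent link once. That is fine as a one-off, but re-running anything like it from scratch for each incoming block at 5 BPS is where I expect the CPU time to go, so the real version will have to be incremental.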

We’re going to need to profile the hell out of this in January. I expect to see flame graphs that are mostly red. But that’s the process. You build the faithful version, you watch it fail under load, and then you optimize the bottlenecks until it screams.

Moving Forward

So, that’s where we are. The code is on GitHub, it matches the paper, and it needs a lot of love before it’s ready for prime time. If you’re into Rust and graph theory, take a look at the rank calculation logic. It’s the current enemy number one.

I’m going to grab a coffee and maybe stop staring at this recursive function for a few hours. Next month is going to be chaotic, but hopefully, by the time we hit the testnet, we’ll have shaved those milliseconds down to microseconds.