Well, I’ve got to admit, I was staring at traceroute output at 2:15 AM last Tuesday, trying to figure out why our Singapore-to-Sydney latency had suddenly jumped by 28 milliseconds. No packet loss. No jitter. Just a clean, flat, consistent increase that ruined the real-time replication for our database cluster.

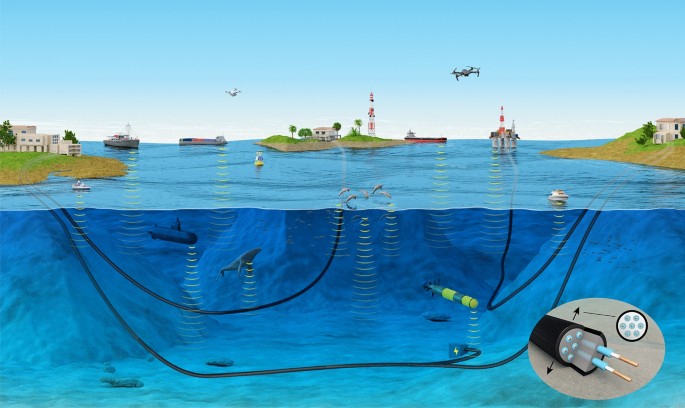

My first thought? A fiber cut. Usually, when a shark (or a trawler) chews through a submarine cable, traffic fails over to a backup route that’s longer and more congested. Standard stuff. I’ve seen it a dozen times.

But this wasn’t a cut. The primary link was up. The traffic was just… taking the scenic route. Intentionally.

And let me tell you, as someone who has to explain these latency penalties to angry product managers, it’s a headache.

The “Friendship” Tax on Your Packets

Here’s the reality nobody puts in the marketing slide decks: secure routing costs time. Light in glass travels at roughly two-thirds the speed of light in a vacuum, so every kilometer you add to avoid a “high-risk” region costs about 5 microseconds each way, or about 10 µs of round-trip time. Route around an entire sea? You’re talking double-digit milliseconds.
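To put numbers on that rule of thumb, here is a back-of-the-envelope sketch. It models pure propagation delay only (no queuing, no extra router hops) and uses the same two-thirds-of-c figure; the function names are my own, not from any library.

```python
# Speed of light in a vacuum, km/s.
C_VACUUM_KM_PER_S = 299_792.458
# Rule of thumb from the text: light in glass moves at ~2/3 of c.
FIBER_FACTOR = 2 / 3


def added_rtt_ms(extra_km: float, factor: float = FIBER_FACTOR) -> float:
    """Extra round-trip time (ms) from `extra_km` more fiber on the path.

    Pure propagation delay; ignores queuing and extra router hops.
    """
    speed_km_per_s = C_VACUUM_KM_PER_S * factor
    one_way_s = extra_km / speed_km_per_s
    return 2 * one_way_s * 1000


def detour_km_for(extra_rtt_ms: float, factor: float = FIBER_FACTOR) -> float:
    """Inverse: extra fiber (km) that would explain a given RTT increase."""
    speed_km_per_s = C_VACUUM_KM_PER_S * factor
    return (extra_rtt_ms / 1000 / 2) * speed_km_per_s


if __name__ == "__main__":
    print(f"1 km      -> {added_rtt_ms(1) * 1000:.1f} us RTT")
    print(f"1,500 km  -> {added_rtt_ms(1500):.1f} ms RTT")
    print(f"+28 ms    -> ~{detour_km_for(28):,.0f} km of extra fiber")
```

At two-thirds of c that works out to roughly 10 µs of RTT per kilometer, so a 1,500 km detour costs about 15 ms. Run in reverse, a 28 ms jump would correspond to roughly 2,800 km of extra glass, if distance alone were to blame (in practice some of it is usually extra hops and queuing).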

In the case of my Singapore-Sydney mystery, our provider had shifted traffic off a direct legacy cable that passed through contested waters and onto a newer, “trusted” system that looped further east. The physical distance was longer. The physics didn’t care about the security clearance of the landing station. It just took longer to get there.

I ran a quick comparison using mtr (v0.96) on our staging box running Ubuntu 24.04. The difference was stark.

- Old Route (cached metrics): 92ms average

- New “Secure” Route: 121ms average

That ~30ms increase doesn’t sound like much until you’re trying to run synchronous writes across a distributed SQL database. Then it’s an eternity. We had to tweak our timeout configurations just to stop the application from flapping.
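Why is ~30 ms an eternity for synchronous writes? A rough model: if every commit has to wait for N cross-region round trips before it is acknowledged, a single connection can never do better than N × RTT per commit. The sketch below assumes exactly that simplified model; the function name and the one-versus-two round-trip cases are illustrative, and real databases differ in how many round trips a commit needs.

```python
def sync_commit_budget(rtt_ms: float, round_trips_per_commit: int = 1) -> tuple[float, float]:
    """Latency floor per commit and max serial commit rate for one connection.

    Assumption (simplified model): each synchronous commit waits for
    `round_trips_per_commit` full cross-region round trips and nothing else.
    """
    commit_ms = rtt_ms * round_trips_per_commit
    commits_per_s = 1000 / commit_ms
    return commit_ms, commits_per_s


if __name__ == "__main__":
    for label, rtt in (("old route", 92.0), ("new route", 121.0)):
        for trips in (1, 2):
            commit_ms, rate = sync_commit_budget(rtt, trips)
            print(f"{label}: {trips} RTT/commit -> {commit_ms:.0f} ms, "
                  f"{rate:.1f} commits/s per connection")
```

With one round trip per commit, going from 92 ms to 121 ms cuts each connection's ceiling from about 10.9 to about 8.3 commits per second, roughly a 24% drop, and a protocol that needs two round trips per commit doubles the absolute penalty.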

Hub Centrality Is Shifting

We are seeing a massive shift toward what I call “political redundancy.” New hubs are popping up in places that make zero sense geographically but perfect sense geopolitically. Look at the recent cable landings in Perth or the aggressive build-out of the “Polar” routes avoiding traditional chokepoints. We are decentralizing the physical layer, not for performance, but for insurance.

Detecting the Shift (Python Script)

Since I can’t trust the providers to announce these shifts in advance (seriously, read the fine print in your SLA: they guarantee uptime, not pathing), I wrote a quick Python script to watch for path changes and latency deviations. It helps pinpoint when a route has been politically “optimized” without our consent.

I’m running this on Python 3.13.2. It uses scapy to probe specific hops.

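```python
import time

from scapy.all import ICMP, IP, conf, sr1

# Suppress scapy verbosity
conf.verb = 0

BASELINE_MS = 95.0  # RTT on the path we expect to be using
MAX_TTL = 20        # a path longer than this is itself a red flag


def measure_route_health(target_ip, expected_hops):
    """
    Checks if we are taking the expected path and measures RTT.
    Needs root (or CAP_NET_RAW) because scapy sends raw packets.
    """
    print(f"--- Probing {target_ip} ---")
    packet = IP(dst=target_ip, ttl=MAX_TTL) / ICMP()

    # Wall-clock timing around sr1(), so it includes a little scapy overhead.
    start_time = time.perf_counter()
    reply = sr1(packet, timeout=2)
    end_time = time.perf_counter()

    if reply is None:
        print("!! No reply. Packet lost or filtered.")
        return

    icmp_type = reply[ICMP].type if reply.haslayer(ICMP) else None
    if icmp_type == 11:
        # TTL expired in transit: the path is now longer than MAX_TTL hops.
        print(f"!! TTL expired at {reply[IP].src}: path exceeds {MAX_TTL} hops.")
        print("!! Possible route shift to non-optimized path.")
        return
    if icmp_type != 0:
        print(f"!! Unexpected reply (ICMP type {icmp_type}) from {reply[IP].src}.")
        return

    rtt_ms = (end_time - start_time) * 1000
    print(f"Current RTT: {rtt_ms:.2f}ms")

    # Rough return-path hop count, assuming the target's initial TTL was a
    # common default (64, 128 or 255). Allow +/-1 for off-by-one and asymmetry.
    initial_ttl = next(t for t in (64, 128, 255) if reply[IP].ttl <= t)
    hops = initial_ttl - reply[IP].ttl
    if abs(hops - expected_hops) > 1:
        print(f"!! Hop count changed: ~{hops} (expected {expected_hops}).")

    # Simple heuristic: If RTT jumps > 20% over baseline, alarm.
    if rtt_ms > BASELINE_MS * 1.2:
        print(f"!! LATENCY SPIKE DETECTED: +{rtt_ms - BASELINE_MS:.2f}ms")
        print("!! Possible route shift to non-optimized path.")
    else:
        print("Latency within normal parameters.")


if __name__ == "__main__":
    # Example target (203.0.113.0/24 is reserved for documentation)
    target = "203.0.113.45"
    measure_route_health(target, 15)
```

I run this as a cron job every 5 minutes. It’s crude, but it saved my bacon last week. When the alert fired, I knew exactly which provider to call and yell at. Not that they moved the traffic back (they claimed it was “maintenance”), but at least I knew it wasn’t my firewall config.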
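The weakest part of that script is the hardcoded 95 ms baseline. Because cron starts a fresh process on every run, a learned baseline has to be persisted somewhere. Here is a minimal sketch, assuming a JSON file of recent RTT samples (the path, window size and function names are hypothetical), that compares each new measurement against the rolling median; you would call `check_and_record(rtt_ms)` in place of the fixed comparison.

```python
import json
import statistics
from pathlib import Path

# Hypothetical state file: cron runs are separate processes, so the
# history has to live on disk between invocations.
STATE_FILE = Path("/var/tmp/route_health_history.json")
WINDOW = 288          # last 24 h of samples at one probe every 5 minutes
SPIKE_FACTOR = 1.2    # same 20% threshold as the script above


def load_history(path: Path) -> list[float]:
    """Read past RTT samples; treat a missing or corrupt file as empty."""
    try:
        return [float(x) for x in json.loads(path.read_text())]
    except (FileNotFoundError, ValueError, TypeError):
        return []


def check_and_record(rtt_ms: float, path: Path = STATE_FILE) -> bool:
    """Return True if rtt_ms is a spike versus the rolling median baseline.

    Spikes are not appended to the history, so a sustained shift keeps
    alarming instead of quietly becoming the new baseline.
    """
    history = load_history(path)
    spike = bool(history) and rtt_ms > statistics.median(history) * SPIKE_FACTOR
    if not spike:
        history = (history + [rtt_ms])[-WINDOW:]
        path.write_text(json.dumps(history))
    return spike
```

The median is less jumpy than a mean when the odd probe gets queued behind a burst. Once you accept that a new path is permanent, delete the state file and let it re-learn.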

The “Humboldt” Effect

One specific example that’s been fascinating to watch this year is the traffic flow across the South Pacific. Since the Humboldt cable system (connecting Chile to Australia/New Zealand) fully lit up, I’ve noticed a strange routing phenomenon.

Previously, traffic from South America to APAC almost exclusively went north to the US, then across the Pacific. It was a massive hairpin. Now, with the direct southern route, you’d expect lower latency. And you get it, physically.
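To see how big that hairpin is, compare great-circle distances. This is only a lower bound on cable length (real systems don't follow great circles), and the city coordinates below are approximate values plugged in for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Approximate city coordinates (lat, lon) in degrees, for illustration only.
CITIES = {
    "Santiago": (-33.45, -70.67),
    "Los Angeles": (34.05, -118.24),
    "Sydney": (-33.87, 151.21),
}


def great_circle_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Haversine distance between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


if __name__ == "__main__":
    direct = great_circle_km(CITIES["Santiago"], CITIES["Sydney"])
    via_us = (great_circle_km(CITIES["Santiago"], CITIES["Los Angeles"])
              + great_circle_km(CITIES["Los Angeles"], CITIES["Sydney"]))
    print(f"Direct:  {direct:,.0f} km")
    print(f"Via LA:  {via_us:,.0f} km ({via_us / direct:.1f}x)")
```

Even as a lower bound, the path via Los Angeles comes out well over 1.5 times the direct distance, and at roughly 10 µs of RTT per kilometer that gap is worth many tens of milliseconds.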

But here’s the gotcha: Peering politics.

Even though the cable is there, I’ve seen packets from Santiago hit Sydney and then bounce back to Los Angeles to hand off to a specific Tier 1 provider before coming back to Asia. Why? Because the commercial peering agreements at the new landing stations haven’t matured yet. We built the “friendly” physical pipe, but the business logic of BGP hasn’t caught up.
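A bounce like that is easy to miss in a long traceroute. One way to flag it, sketched under the assumption that you already label each hop with a region (from reverse DNS or a geo-IP lookup; the labels and `find_hairpins` are hypothetical), is to look for a region the path leaves and later re-enters.

```python
from itertools import groupby


def find_hairpins(hop_regions: list[str]) -> list[str]:
    """Return regions the path leaves and later re-enters, in order found.

    `hop_regions` holds one label per traceroute hop (hypothetical input;
    drop or label unresponsive hops before calling this).
    """
    # Collapse consecutive hops in the same region: SYD, SYD, LAX -> SYD, LAX
    runs = [region for region, _ in groupby(hop_regions)]
    seen: set[str] = set()
    hairpins: list[str] = []
    for region in runs:
        if region in seen and region not in hairpins:
            hairpins.append(region)
        seen.add(region)
    return hairpins


if __name__ == "__main__":
    # Illustrative path shaped like the Santiago -> Sydney -> LA bounce.
    path = ["SCL", "SCL", "SYD", "SYD", "LAX", "LAX", "SYD", "SIN"]
    print(find_hairpins(path))  # -> ['SYD']
```

Collapsing runs first matters: several consecutive hops inside the same metro are normal, and only a genuine exit-and-return should count.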

So we have a billion-dollar cable sitting on the ocean floor, and my packets are ignoring it because two massive telcos can’t agree on a settlement-free peering price in Valparaíso. Classic.

What You Can Do About It

My advice? Diversify your transit. Don’t just buy two links from different providers; check the actual cable systems they ride on. If Provider A and Provider B both ride the same “trusted” cable system because regulations forced them to abandon the legacy route, you don’t have redundancy. You have a single point of failure with two different logos on the bill.
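Checking for that is mostly bookkeeping. Assuming you can get each provider to disclose which cable systems a link rides on (the inventory below is made-up placeholder data), a pairwise set intersection finds the "two logos, one cable" cases.

```python
from itertools import combinations

# Hypothetical inventory: which cable systems each transit link rides on.
# Fill this from provider disclosures or contract exhibits, not guesses.
TRANSIT_CABLES = {
    "provider_a": {"cable_x", "cable_y"},
    "provider_b": {"cable_y"},
    "provider_c": {"cable_z"},
}


def shared_cable_risks(inventory: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Map each provider pair to the cable systems they both depend on."""
    risks = {}
    # Sorting by provider name keeps the output order stable.
    for (p1, c1), (p2, c2) in combinations(sorted(inventory.items()), 2):
        overlap = c1 & c2
        if overlap:
            risks[(p1, p2)] = overlap
    return risks


if __name__ == "__main__":
    for (p1, p2), cables in shared_cable_risks(TRANSIT_CABLES).items():
        print(f"{p1} + {p2} share {sorted(cables)}: not diverse")
```

A stricter version would also compare landing stations and terrestrial backhaul, since two different cables can still come ashore at the same site.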

I’ve started explicitly asking for “diverse cable system” clauses in our contracts. Most sales reps look at me like I’m speaking Martian, but a few get it. It costs more. A lot more. But when the next geopolitical spat causes a route flap, at least my database won’t time out.