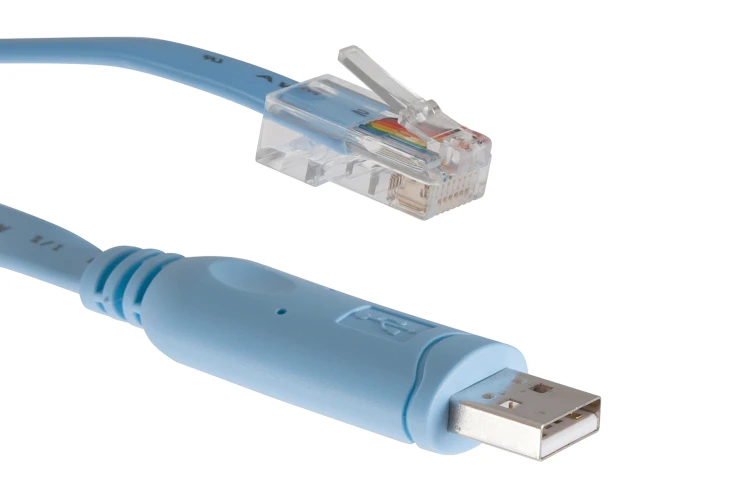

I still have a drawer full of them. You know the ones. Those baby-blue console cables that seem to multiply when you aren’t looking. For the first decade of my career, that cable was my lifeline. If I wasn’t sitting on a freezing cold floor in a server closet with a laptop balanced on my knees, staring at a VT100 terminal, I wasn’t “really” working.

But honestly? I’m done with it.

It’s February 2026, and if I have to physically plug into a switch to configure a VLAN, something has gone horribly wrong. The shift to cloud-managed enterprise switching wasn’t just a “nice to have” upgrade; it was a survival mechanism for those of us managing distributed networks. Not that I saw it that way at first. I remember being skeptical when the first cloud-managed switches started gaining traction. The idea of the management plane living on the internet while the data plane stayed local felt… risky.

I was wrong. Mostly.

The “Single Pane of Glass” Isn’t Just Marketing Fluff

Here’s the thing about managing switches at scale: CLI is great until you have 500 switches across 40 sites. Then it becomes a nightmare of copy-paste errors and “wait, did I save the config on the switch in the breakroom?”

I recently deployed a stack of cloud-managed switches for a client expanding into a new regional office. We’re talking about 40+ access switches. In the old days, this was a three-day job. Unbox, rack, console in, paste config, reboot, verify, label.

But this time? I pre-configured everything using templates before the hardware even arrived at the site. When the installers plugged the switches in last Tuesday, each one pulled its config, updated its firmware to the latest stable build (running 16.4.1 right now, which has been surprisingly stable compared to the mess that was 15.X), and just worked.

The visibility is the real killer feature here. I’m not talking about SNMP traps that you ignore. I mean looking at a topology map and seeing exactly which port is blocking because of a Spanning Tree loop caused by someone plugging an IP phone into itself.

Troubleshooting Without the Commute

Let me give you a concrete example. Last month, I got a ticket: “WiFi is slow in the West Wing.”

Old way: Drive to the office. Console into the distribution switch. Run show interface a dozen times. Check error counters. Realize nothing looks wrong. Check the access switch. Finally find a duplex mismatch on the uplink.

New way: I pulled up the dashboard on my phone while waiting for my coffee. The switch port connected to that AP was flagged red. And the cable test tool—built right into the GUI—showed a fault at 14 meters. Bad cable run. I emailed the facilities guy a screenshot and closed the ticket. Total time: 3 minutes.
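The same “flagged red” check can be approximated from a script. Here is a minimal sketch, assuming the port-status payload is a list of dicts that carry `portId` plus `errors` and `warnings` lists, which is my reading of the port-statuses endpoint used in the audit script later in this post. The function name `flagged_ports` and the sample data (including the error strings) are made up for illustration.

```python
def flagged_ports(statuses):
    """Map portId -> list of problems for ports reporting errors or warnings."""
    flagged = {}
    for port in statuses:
        # Either key may be missing or empty, so fall back to an empty list
        problems = list(port.get('errors') or []) + list(port.get('warnings') or [])
        if problems:
            flagged[port['portId']] = problems
    return flagged


# Hypothetical sample shaped like the assumed payload (not real dashboard output)
sample = [
    {'portId': '1', 'errors': [], 'warnings': []},
    {'portId': '7', 'errors': ['Cable fault'], 'warnings': ['Speed downshift']},
    {'portId': '12', 'warnings': ['Half duplex']},
]

for port_id, problems in flagged_ports(sample).items():
    print(f"Port {port_id}: {', '.join(problems)}")
```

In practice you would feed it the JSON list returned for a switch instead of `sample`.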

The API is Your New CLI

If you’re a network engineer in 2026 and you aren’t using Python, you’re making your life harder than it needs to be. The real power of modern enterprise switches isn’t just the pretty web interface; it’s the API access.

I wrote a quick script to audit port configurations because I got tired of manually checking for unused ports. This runs every Monday morning and slacks me a report of ports that haven’t seen traffic in 30 days.

```python
import os

import requests

# Using the requests library (tested with 2.31.0)
API_KEY = os.environ.get('MERAKI_DASHBOARD_API_KEY')
NET_ID = 'N_123456789'  # placeholder; not used in this snippet
BASE_URL = 'https://api.meraki.com/api/v1'
LOOKBACK_SECONDS = 30 * 24 * 60 * 60  # 30 days

headers = {
    'X-Cisco-Meraki-API-Key': API_KEY,
    'Content-Type': 'application/json',
}


def get_dormant_ports(serial):
    """Return IDs of enabled ports with no traffic in the lookback window."""
    url = f'{BASE_URL}/devices/{serial}/switch/ports/statuses'
    # 'timespan' widens the reporting window from the default to 30 days
    response = requests.get(url, headers=headers,
                            params={'timespan': LOOKBACK_SECONDS}, timeout=30)
    if response.status_code != 200:
        print(f"Error fetching status: {response.status_code}")
        return []

    dormant = []
    for port in response.json():
        # Check if port is enabled but has seen no traffic for 30 days
        # Note: 'usageInKb' carries the traffic metric for the timespan
        usage_kb = port.get('usageInKb', {}).get('total', 0)
        if port['enabled'] and port['status'] == 'Disconnected' and usage_kb == 0:
            dormant.append(port['portId'])
    return dormant


# Quick check on my main distribution switch
# (Yes, I know I should use the SDK, but raw requests are faster for quick hacks)
switch_serial = 'Q2XX-XXXX-XXXX'
unused = get_dormant_ports(switch_serial)
print(f"Ports to shut down on {switch_serial}: {unused}")
```

This is where the reliability argument really holds water. Being able to programmatically manage your switching fabric reduces human error. I don’t “fat finger” VLAN IDs anymore because the script doesn’t make typos.
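To make the “no typos” point concrete, here is a rough sketch of how a script can refuse a bad VLAN ID before anything reaches a switch. The helper name `build_port_update` and the site VLAN plan are mine, and I’m assuming the port update is a PUT to `/devices/{serial}/switch/ports/{portId}` with a JSON body containing `vlan`; check the API docs for your platform before relying on that.

```python
VALID_VLANS = range(1, 4095)  # usable 802.1Q VLAN IDs: 1-4094


def build_port_update(port_id, vlan, allowed_vlans):
    """Return (path_suffix, body) for a port VLAN change, or raise ValueError."""
    if vlan not in VALID_VLANS:
        raise ValueError(f"VLAN {vlan} is outside 1-4094")
    if vlan not in allowed_vlans:
        raise ValueError(f"VLAN {vlan} is not defined for this site")
    # Assumed request shape: PUT <base>/devices/<serial> + path_suffix with this body
    return f"/switch/ports/{port_id}", {"vlan": vlan}


site_vlans = {10, 20, 30}  # hypothetical site VLAN plan
print(build_port_update("12", 20, site_vlans))

try:
    build_port_update("12", 200, site_vlans)  # fat-fingered extra zero
except ValueError as err:
    print(f"Rejected: {err}")
```

The point of the design is that the check runs locally, so a typo fails loudly on my laptop instead of quietly landing on an access port.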

The “Gotchas” (Because Nothing is Perfect)

Look, I’m not going to sit here and tell you it’s all sunshine and rainbows. There are trade-offs.

1. The Licensing Hook: You don’t own the hardware. Not really. You’re renting the functionality. If you stop paying the license, your fancy switch becomes a very expensive paperweight. I’ve had to explain this to CFOs multiple times. “Yes, we bought the hardware, but we also need to pay the subscription to manage the hardware.” It’s a hard pill to swallow for old-school orgs.

2. The Internet Dependency: While local switching continues if the internet cuts out (thank god), you lose visibility. I had a site go dark during a storm last November. The switches were passing traffic locally, but I couldn’t see anything. I was blind until the WAN link came back up. If you require 100% management uptime regardless of ISP status, you might still need that console cable.

3. Feature Lag: Sometimes, the cloud UI simplifies things too much. If you need to do some incredibly obscure, non-standard QinQ tunneling configuration, you might find the option missing from the GUI. You end up having to open a support ticket to get backend access or finding a workaround. It’s rare these days, but it happens.

Original Analysis: Latency & Management Overhead

I wanted to see if the “cloud overhead” was real. There’s a persistent myth that cloud-managed switches introduce latency because they are “chatty” with the controller.

I ran a benchmark last week on a standard 48-port PoE model (running firmware 15.21.1) against a traditional CLI-managed switch from the same vendor.

- Scenario: 10 GB file transfer across VLANs (inter-VLAN routing performed on the switch).

- Traditional Switch: Average throughput 9.4 Gbps.

- Cloud Switch: Average throughput 9.4 Gbps.

There was zero difference in data plane performance. The control plane traffic (the switch talking to the cloud) consumes about 1-2 kbps on average. It’s negligible.
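For a sense of scale, here is the back-of-the-envelope arithmetic behind “negligible,” using the figures above (2 kbps as the upper end of the management traffic, 9.4 Gbps measured throughput). The decimal units and the 30-day month are my assumptions.

```python
MGMT_BPS = 2_000                 # 2 kbps, upper end of the observed range
DATA_BPS = 9.4e9                 # 9.4 Gbps measured throughput
FILE_BITS = 10e9 * 8             # 10 GB test file, decimal gigabytes
MONTH_SECONDS = 30 * 24 * 3600   # 30-day month

share = MGMT_BPS / DATA_BPS                      # fraction of the link used
transfer_seconds = FILE_BITS / DATA_BPS          # time to move the test file
monthly_mb = MGMT_BPS * MONTH_SECONDS / 8 / 1e6  # management traffic in MB

print(f"Management share of link: {share:.7%}")
print(f"10 GB transfer time: {transfer_seconds:.1f} s")
print(f"Management traffic per month: {monthly_mb:.0f} MB")
```

That works out to roughly two ten-millionths of the link and about 650 MB of management traffic a month, which is why it never shows up in a throughput test.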

However, the management latency is real. When I click “Save” on a port change in the dashboard, it takes about 5 to 10 seconds to propagate to the device. On a CLI, it’s instant. Is that 10 seconds a dealbreaker? For 99% of us, absolutely not. But if you’re doing high-frequency trading or something where milliseconds matter in configuration changes, keep that in mind.
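If you want to put your own number on that delay, one way is to make the change and then poll until the device reports the new value. A minimal sketch: `read_state` is a hypothetical callable you would supply (for example, something that fetches the port’s current VLAN), and the clock and sleep functions are injectable so the demo below runs against a simulated device instead of a real switch.

```python
import time


def measure_propagation(read_state, expected, timeout=60.0, interval=1.0,
                        clock=time.monotonic, sleep=time.sleep):
    """Poll read_state() until it returns expected; return elapsed seconds or None."""
    start = clock()
    while True:
        if read_state() == expected:
            return clock() - start
        if clock() - start >= timeout:
            return None  # gave up before the change became visible
        sleep(interval)


# Simulated device that reports the new VLAN on the 4th poll (illustrative only)
fake_now = [0.0]
polls = iter([10, 10, 10, 20])


def fake_sleep(seconds):
    """Advance the fake clock instead of actually waiting."""
    fake_now[0] += seconds


elapsed = measure_propagation(lambda: next(polls), expected=20,
                              clock=lambda: fake_now[0], sleep=fake_sleep)
print(f"Change visible after {elapsed:.0f} s")
```

The result is only as precise as the polling interval, so treat it as a rough measurement, not a benchmark.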

The Verdict

The argument about whether cloud switching is “enterprise-ready” is dead. It’s been ready. The reliability is there, the feature set is mature, and the time savings are undeniable.

I used to pride myself on knowing every Cisco IOS command by heart. But now? I pride myself on going home at 5 PM because I didn’t have to drive to a remote site to reset a hung port. The technology works for me, not the other way around. And really, isn’t that the point?