The Shift from Monolith to Microservices: A Modern Architectural Paradigm

In the world of software development, the quest for scalability, resilience, and agility never ends. For years, the monolithic architecture, a single unified codebase for an entire application, was the standard. Monoliths are simple to start with, but they often grow into unwieldy giants: updates become slow, the whole application has to be scaled as one unit, and a single faulty component can bring everything down. Enter microservices, an architectural style that structures an application as a collection of small, autonomous services modeled around business domains. Instead of one tightly coupled unit, you have a distributed system of services that communicate over a network, each responsible for a specific business capability, so that large, complex applications can be developed, deployed, and scaled piece by piece. This shift in network architecture and application design has become a cornerstone of modern cloud and DevOps practice, enabling organizations to build robust systems that cope with large scale and high complexity.

Core Concepts: Deconstructing the Microservice

At its heart, a microservice is a small, independently deployable application component. The core principles guiding this architecture are designed to maximize autonomy and minimize dependencies, leading to more resilient and maintainable systems.

Key Principles of Microservices

- Single Responsibility: Each microservice is designed to do one thing and do it well. It owns a specific business capability, such as user authentication, payment processing, or inventory management.

- Loose Coupling: Services should have minimal knowledge of each other’s internals. They communicate through well-defined network APIs, typically over lightweight protocols such as HTTP. As long as a service keeps its API contract stable, its internals can change without requiring changes in the services that call it.

- Independent Deployability: Because services are decoupled, they can be updated, tested, and deployed independently. A bug fix in the “recommendations” service doesn’t require a full redeployment of the entire e-commerce platform.

- Decentralized Data Management: Each microservice typically manages its own database. This “database-per-service” pattern prevents services from being tightly coupled at the data layer and allows each service to choose the best database technology for its specific needs.

A Practical Example: User and Order Services

Let’s illustrate this with a simple e-commerce scenario. We can have a user-service that manages user data and an order-service that handles customer orders. When a new order is created, the order-service needs to verify that the user exists by communicating with the user-service.

Here is a basic implementation of a user-service using Python and the Flask framework. This service exposes an endpoint to retrieve user information.

```python
# user_service.py
from flask import Flask, jsonify

app = Flask(__name__)

# In-memory database for demonstration
users = {
    "1": {"name": "Alice", "email": "alice@example.com"},
    "2": {"name": "Bob", "email": "bob@example.com"},
}


@app.route("/users/<user_id>", methods=["GET"])
def get_user(user_id):
    """Retrieves user details for a given user_id."""
    user = users.get(user_id)
    if user:
        return jsonify(user)
    return jsonify({"error": "User not found"}), 404


if __name__ == "__main__":
    # This service runs on port 5000
    app.run(port=5000, debug=True)
```

This service is simple, focused, and can be developed and deployed entirely on its own. It communicates at the application layer of the OSI model using HTTP.

Implementation Patterns: Communication and Data

Building a distributed system introduces challenges that don’t exist in a monolith, primarily around inter-service communication and data consistency. The choice of communication pattern is critical to the performance and resilience of the overall network design.

Synchronous vs. Asynchronous Communication

Synchronous communication is a blocking pattern where the client sends a request and waits for a response. REST APIs over HTTP are the most common implementation. It’s simple and familiar but can lead to tight coupling and cascading failures if a downstream service is slow or unavailable.

Now, let’s see how our order-service would synchronously call the user-service.

# order_service.py

from flask import Flask, request, jsonify

import requests # A popular library for making HTTP requests

app = Flask(__name__)

USER_SERVICE_URL = "http://localhost:5000" # URL of the user service

@app.route("/orders", methods=['POST'])

def create_order():

"""

Creates an order after validating the user exists.

"""

data = request.get_json()

user_id = data.get('user_id')

if not user_id:

return jsonify({"error": "user_id is required"}), 400

# Synchronous call to the user-service

try:

response = requests.get(f"{USER_SERVICE_URL}/users/{user_id}")

if response.status_code == 404:

return jsonify({"error": "User validation failed: User not found"}), 404

response.raise_for_status() # Raise an exception for other bad statuses

except requests.exceptions.RequestException as e:

# Handle network errors, timeouts, etc.

return jsonify({"error": f"Could not connect to user service: {e}"}), 503

# If user is valid, proceed to create the order

print(f"User {user_id} validated. Creating order...")

# ... order creation logic here ...

return jsonify({"message": "Order created successfully", "user_id": user_id}), 201

if __name__ == '__main__':

# This service runs on port 5001

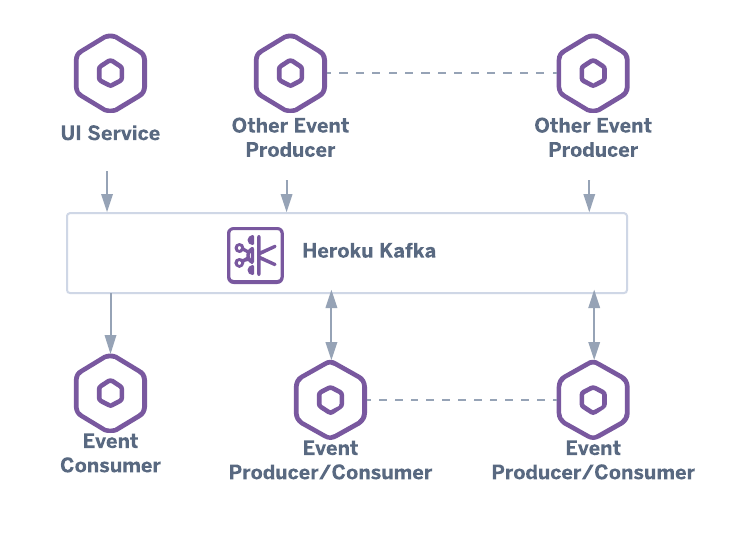

app.run(port=5001, debug=True)Asynchronous communication uses a non-blocking, event-driven model. A service publishes an event to a message broker (like RabbitMQ or Apache Kafka), and other interested services subscribe to these events. This decouples services, improves fault tolerance, and is ideal for long-running processes. For example, after an order is created, the order-service could publish an OrderCreated event. A separate notification-service could then consume this event to send an email to the customer without the order-service having to wait.
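To make the publish/subscribe flow concrete without running RabbitMQ or Kafka, here is a minimal in-memory sketch. The `InMemoryBroker` class, the `OrderCreated` event fields, and the `drain_notifications` consumer are hypothetical stand-ins: a real broker adds persistence, acknowledgements, delivery guarantees, and a network transport. What the sketch does show is the key property: `publish` only enqueues and returns, so the order-service never waits for the notification-service.

```python
import queue
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderCreated:
    # Hypothetical event payload
    order_id: str
    user_id: str
    email: str


class InMemoryBroker:
    """Toy stand-in for a message broker: one FIFO queue per subscriber."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[queue.Queue]] = defaultdict(list)

    def subscribe(self, topic: str) -> queue.Queue:
        inbox: queue.Queue = queue.Queue()
        self._subscribers[topic].append(inbox)
        return inbox

    def publish(self, topic: str, event) -> None:
        # Enqueue for every subscriber and return immediately.
        for inbox in self._subscribers[topic]:
            inbox.put(event)


def drain_notifications(inbox: queue.Queue) -> list[str]:
    """Hypothetical notification-service loop: handle every event currently queued."""
    sent = []
    while True:
        try:
            event = inbox.get_nowait()
        except queue.Empty:
            return sent
        sent.append(f"Email to {event.email}: order {event.order_id} confirmed")


broker = InMemoryBroker()
notification_inbox = broker.subscribe("OrderCreated")

# order-service side: publish and move on
broker.publish("OrderCreated", OrderCreated(order_id="42", user_id="1", email="alice@example.com"))

# notification-service side, possibly much later
print(drain_notifications(notification_inbox))
# ['Email to alice@example.com: order 42 confirmed']
```

Because each subscriber has its own queue, adding another consumer (for example, an analytics service) requires no change to the publisher.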

Data Management Strategies

The “database-per-service” pattern is a core tenet of microservices. While it grants autonomy, it complicates data consistency. A business transaction that spans multiple services cannot rely on a single database transaction, so it needs a pattern such as the Saga pattern: each service commits its own local transaction, and if a later step fails, the earlier steps are undone by compensating actions (for example, releasing reserved stock) rather than by a database rollback. This is a significant departure from the simple ACID transactions available in a monolithic application’s single database.
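The compensation idea can be sketched as an orchestrated saga, where one coordinator runs the steps in order and, on failure, runs the compensations of the completed steps in reverse. This is a minimal sketch under simplifying assumptions: the step names and the failing `charge_payment` function are hypothetical, and a production saga would also persist its progress and make compensations idempotent so it can resume after a crash.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]      # local transaction in one service
    compensate: Callable[[], None]  # semantic undo for that transaction


def run_saga(steps: list[SagaStep]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse order."""
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception as exc:
            print(f"{step.name} failed ({exc}); compensating")
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


log: list[str] = []


def charge_payment() -> None:
    # Simulated failure in a hypothetical payment-service
    raise RuntimeError("card declined")


steps = [
    SagaStep("create order", lambda: log.append("order created"),
             lambda: log.append("order cancelled")),
    SagaStep("reserve stock", lambda: log.append("stock reserved"),
             lambda: log.append("stock released")),
    SagaStep("charge payment", charge_payment, lambda: log.append("payment refunded")),
]

ok = run_saga(steps)
print(ok, log)  # False ['order created', 'stock reserved', 'stock released', 'order cancelled']
```

The failed step itself is not compensated, since its local transaction never committed; only the two earlier steps are undone.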

Advanced Techniques and the Broader Ecosystem

As a microservices architecture grows, managing the complexity of service interaction, deployment, and observability becomes a major challenge. A rich ecosystem of tools and patterns has emerged to address this, transforming network administration and operations.

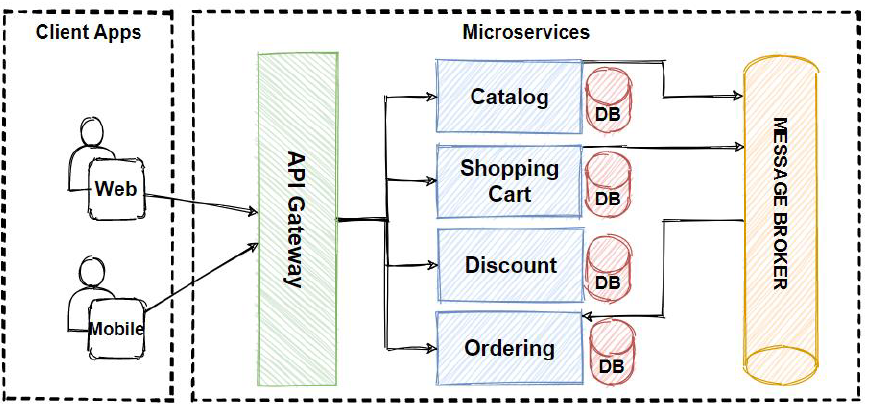

Service Discovery and API Gateways

In a dynamic cloud environment, services are constantly scaled up and down, and their network locations (IP addresses and ports) change. Service discovery tools such as Consul or Eureka provide a dynamic registry where service instances register themselves and look each other up. This removes the need to hardcode addresses like the http://localhost:5000 used by the order-service above.
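The core mechanism of such a registry can be sketched in a few lines: instances register with a time-limited lease, renew it with heartbeats, and silently drop out of lookups when they stop. This toy `ServiceRegistry` is an illustrative assumption, not the API of Consul or Eureka; the TTL, the round-robin `lookup`, and the injectable `clock` (used here to make the example deterministic) are all choices made for the sketch.

```python
import itertools
import time
from typing import Callable, Optional


class ServiceRegistry:
    """Toy registry: instances hold a lease of `ttl_seconds` and must heartbeat to stay listed."""

    def __init__(self, ttl_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._leases: dict[str, dict[str, float]] = {}  # service -> {address: expiry time}
        self._cursors: dict[str, itertools.count] = {}  # round-robin position per service

    def register(self, service: str, address: str) -> None:
        self._leases.setdefault(service, {})[address] = self._clock() + self._ttl

    # A heartbeat simply renews the lease, which is the same operation as registering.
    heartbeat = register

    def deregister(self, service: str, address: str) -> None:
        self._leases.get(service, {}).pop(address, None)

    def healthy(self, service: str) -> list[str]:
        now = self._clock()
        return sorted(a for a, expiry in self._leases.get(service, {}).items() if expiry > now)

    def lookup(self, service: str) -> Optional[str]:
        """Return one live instance, rotating round-robin across calls, or None."""
        live = self.healthy(service)
        if not live:
            return None
        cursor = self._cursors.setdefault(service, itertools.count())
        return live[next(cursor) % len(live)]


now = [0.0]  # fake clock
registry = ServiceRegistry(ttl_seconds=10, clock=lambda: now[0])
registry.register("user-service", "10.0.0.5:5000")
registry.register("user-service", "10.0.0.6:5000")
print(registry.lookup("user-service"), registry.lookup("user-service"))  # 10.0.0.5:5000 10.0.0.6:5000
now[0] = 8.0
registry.heartbeat("user-service", "10.0.0.6:5000")  # only .6 renews its lease
now[0] = 12.0
print(registry.healthy("user-service"))  # ['10.0.0.6:5000']
```

Lease expiry means a crashed instance disappears from lookups without anyone having to deregister it explicitly.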

An API Gateway acts as a single entry point for all client requests. It can handle concerns like authentication, rate limiting, and request routing to the appropriate backend service. This simplifies client-side logic and strengthens API security by providing a single chokepoint for enforcing security policies. Products such as Kong and Tyk, or managed offerings such as AWS API Gateway, are popular choices.
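Two of those gateway duties, rate limiting and routing, can be sketched together: a token bucket per client decides whether a request may pass, and a longest-prefix route table decides which backend receives it. This is a toy illustration, not how Kong, Tyk, or AWS API Gateway are configured (they are set up declaratively); the route table, bucket sizes, and `(status, upstream URL)` return shape are assumptions, and authentication is omitted.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


class Gateway:
    """Toy API gateway: per-client rate limiting, then longest-prefix routing."""

    def __init__(self, routes: dict[str, str], capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # Check longer prefixes first so a specific route wins over a general one.
        self._routes = sorted(routes.items(), key=lambda kv: len(kv[0]), reverse=True)
        self._capacity, self._rate, self._clock = capacity, rate, clock
        self._buckets: dict[str, TokenBucket] = {}

    def handle(self, client_id: str, path: str) -> tuple[int, Optional[str]]:
        """Return (status, upstream URL); the URL is None when the request is not forwarded."""
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = self._buckets[client_id] = TokenBucket(self._capacity, self._rate, self._clock)
        if not bucket.allow():
            return 429, None  # Too Many Requests
        for prefix, upstream in self._routes:
            if path == prefix or path.startswith(prefix + "/"):
                return 200, upstream + path
        return 404, None


now = [0.0]  # fake clock so the example is deterministic
gw = Gateway({"/users": "http://user-service", "/orders": "http://order-service"},
             capacity=2, rate=1.0, clock=lambda: now[0])
print(gw.handle("client-a", "/users/1"))  # (200, 'http://user-service/users/1')
print(gw.handle("client-a", "/orders"))   # (200, 'http://order-service/orders')
print(gw.handle("client-a", "/users/2"))  # (429, None): burst allowance used up
now[0] = 1.0                               # one second later, one token has refilled
print(gw.handle("client-a", "/carts/9"))  # (404, None): no matching route
```

Keeping a separate bucket per client means one noisy client is throttled without affecting others.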

The Rise of the Service Mesh

A service mesh, implemented with tools like Istio or Linkerd, takes operational control to the next level. It is a dedicated infrastructure layer for managing service-to-service communication. By deploying a lightweight proxy, known as a sidecar, alongside each service instance (Istio uses the Envoy proxy for this role, while Linkerd uses its own proxy), a service mesh can transparently handle:

- Intelligent Load Balancing

- Resiliency patterns like circuit breakers and retries

- Encryption of service-to-service traffic, typically via mutual TLS (enhancing network security)

- Rich telemetry, metrics, and distributed tracing for observability

This moves complex networking logic out of the application code and into the platform layer, letting developers concentrate on business logic.
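A mesh applies circuit breaking inside its sidecar proxies, so application code usually does not implement it. To show what the proxy is doing, here is a minimal in-process circuit breaker sketch. The class name, the `threshold` and `reset_after` parameters, and the injectable clock are illustrative assumptions, not the configuration model of Istio, Linkerd, or any library, and the sketch is not thread-safe.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the downstream service while the circuit is open."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; allows a trial call after `reset_after` seconds."""

    def __init__(self, threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_after:
            return "half-open"  # the next call is a trial
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        state = self.state
        if state == "open":
            raise CircuitOpenError("failing fast: downstream service marked unhealthy")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if state == "half-open" or self._failures >= self.threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        self._failures = 0
        self._opened_at = None  # any success closes the circuit
        return result


now = [0.0]  # fake clock
breaker = CircuitBreaker(threshold=2, reset_after=10, clock=lambda: now[0])


def flaky_user_service() -> dict:
    raise ConnectionError("user-service unavailable")


for _ in range(3):
    try:
        breaker.call(flaky_user_service)
    except CircuitOpenError:
        print("fast fail, state:", breaker.state)
    except ConnectionError:
        print("call failed, state:", breaker.state)

now[0] = 10.0
print(breaker.state)  # half-open
print(breaker.call(lambda: {"name": "Alice"}), breaker.state)  # {'name': 'Alice'} closed
```

The third call in the loop never reaches the failing service: the breaker rejects it immediately, which is what stops a slow dependency from tying up callers and cascading.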

Containerization with Docker and Kubernetes

Microservices pair naturally with containers. Docker allows you to package each service and its dependencies into a lightweight, portable image. Kubernetes then acts as the container orchestrator, automating the deployment, scaling, and management of these containerized applications. It handles tasks like restarting failed containers, scaling services based on load, and routing network traffic between them. Here is a conceptual pair of Kubernetes manifests, a Deployment and a Service, for our user-service:

```yaml
# user-service-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user-service
spec:
  replicas: 3 # Run 3 instances of our service for high availability
  selector:
    matchLabels:
      app: user-service
  template:
    metadata:
      labels:
        app: user-service
    spec:
      containers:
        - name: user-service-container
          image: my-repo/user-service:1.0.0 # The Docker image for our service
          ports:
            - containerPort: 5000
---
apiVersion: v1
kind: Service
metadata:
  name: user-service
spec:
  selector:
    app: user-service
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5000
  type: ClusterIP # Exposes the service on an internal IP in the cluster
```

This YAML file tells Kubernetes to maintain three running instances (replicas) of our user-service container and to create a stable internal network service that allows other microservices (like the order-service) to communicate with it.
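With this Service in place, pods in the same namespace can typically reach the user-service through cluster DNS at `http://user-service` (port 80, forwarded to container port 5000), so the order-service’s hardcoded `http://localhost:5000` should become configuration. Note also that Flask’s `app.run()` listens on 127.0.0.1 by default, which is not reachable from outside the container. Below is a minimal sketch of environment-driven settings; the variable names `USER_SERVICE_URL`, `HOST`, and `PORT` are assumptions, to be set in the Deployment’s `env` section or left to the defaults.

```python
import os


def user_service_url(env=os.environ) -> str:
    """Base URL for user-service; defaults to the Kubernetes Service DNS name."""
    # USER_SERVICE_URL is a hypothetical variable name chosen for this sketch.
    return env.get("USER_SERVICE_URL", "http://user-service").rstrip("/")


def bind_address(env=os.environ, default_port: int = 5000) -> tuple[str, int]:
    """Where this process should listen; 0.0.0.0 accepts traffic from outside the container."""
    return env.get("HOST", "0.0.0.0"), int(env.get("PORT", default_port))


print(user_service_url({}))                                              # http://user-service
print(user_service_url({"USER_SERVICE_URL": "http://localhost:5000/"}))  # http://localhost:5000
print(bind_address({"PORT": "5001"}))                                    # ('0.0.0.0', 5001)
```

In the Flask services this would be used as `host, port = bind_address()` followed by `app.run(host=host, port=port)`, while local development can still point `USER_SERVICE_URL` at localhost. In production, a WSGI server such as Gunicorn would normally replace `app.run()`.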

Best Practices and Common Pitfalls

Adopting microservices is a significant undertaking. Following best practices is crucial to avoid common traps that can negate the architecture’s benefits.

Best Practices for Success

- Automate Everything: A robust CI/CD pipeline is non-negotiable. With potentially hundreds of services, manual deployment quickly becomes impractical. Automation ensures consistency and speed.

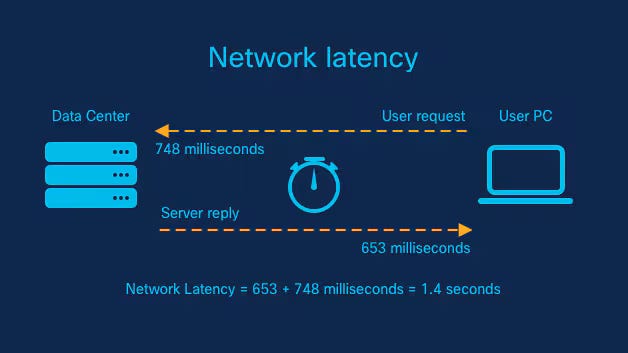

- Design for Failure: In a distributed system, network failures and service unavailability are inevitable. Implement resiliency patterns such as timeouts and circuit breakers (which prevent cascading failures). Never assume low latency or high bandwidth.

- Embrace Observability: You can’t fix what you can’t see. Centralized logging (e.g., the ELK Stack), metrics (Prometheus), and distributed tracing (Jaeger) are essential for monitoring and troubleshooting a distributed system.

- Domain-Driven Design (DDD): Drawing service boundaries around clear business domains (what DDD calls “bounded contexts”) helps keep each service cohesive and loosely coupled to the others.
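The idea underneath distributed tracing and centralized logging is that every hop of a request carries a shared identifier. Tools like Jaeger typically propagate W3C Trace Context (the `traceparent` header) for this; the sketch below shows the simpler correlation-ID version of the same idea using only the standard library. The `X-Request-ID` header name is a common convention assumed here, not a standard, and a plain dict stands in for the (case-insensitive) request headers a web framework would provide.

```python
import contextvars
import logging
import uuid
from typing import Mapping

REQUEST_ID_HEADER = "X-Request-ID"  # assumed header name
request_id = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Stamps every log record with the ID of the request being handled."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id.get()
        return True


def start_request(incoming_headers: Mapping[str, str]) -> str:
    """Reuse the caller's ID if one was sent; otherwise mint a new one at the edge."""
    rid = incoming_headers.get(REQUEST_ID_HEADER) or uuid.uuid4().hex
    request_id.set(rid)
    return rid


def outgoing_headers() -> dict[str, str]:
    """Headers to attach when this service calls another service."""
    return {REQUEST_ID_HEADER: request_id.get()}


logger = logging.getLogger("order-service")
handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter("%(name)s [%(request_id)s] %(message)s"))
handler.addFilter(RequestIdFilter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False

start_request({"X-Request-ID": "abc123"})  # e.g. headers received from the API gateway
logger.info("validating user 1")            # logs: order-service [abc123] validating user 1
print(outgoing_headers())                    # {'X-Request-ID': 'abc123'}
```

If the order-service passes `outgoing_headers()` on its call to the user-service, and the user-service does the same on its side, a single search for `abc123` in the central log store returns the whole request path.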

Common Pitfalls to Avoid

- The Distributed Monolith: This is the most common anti-pattern, where services are technically separate but are so tightly coupled through synchronous calls that they must be deployed together. This gives you all the complexity of a distributed system with none of the benefits.

- Ignoring Network Complexity: Treating a network call like a local function call is a mistake. Network calls can fail, time out, or be slow; every call pays for serialization and the TCP/IP stack, and problems can arise at any network layer.

- Data Consistency Nightmares: Without careful planning, managing data consistency across services can become a major headache. The Saga pattern is powerful but complex to implement correctly.

- Premature Adoption: For small teams or simple applications, the operational overhead of microservices can outweigh the benefits. A well-structured monolith is often a better starting point.
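Taking network failures seriously usually starts with timeouts plus bounded retries. Below is a minimal sketch of retrying with exponential backoff and “full jitter” (a random wait up to a capped, exponentially growing delay, so many clients don’t retry in lockstep). The function name and defaults are assumptions for illustration; `sleep` is injectable so the example runs instantly, and retries are only safe for idempotent operations such as the GET to the user-service.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.1,
                       max_delay: float = 2.0,
                       retry_on: tuple = (ConnectionError, TimeoutError),
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient failures with exponential backoff and full jitter.

    Only wrap idempotent operations: retrying a POST could create a duplicate order.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time up to the capped exponential delay.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable")


calls = {"n": 0}


def sometimes_down() -> str:
    # Hypothetical downstream call that fails twice, then recovers
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("connection reset")
    return "user 1 ok"


delays: list[float] = []
print(retry_with_backoff(sometimes_down, sleep=delays.append))  # user 1 ok
print(calls["n"], len(delays))                                   # 3 2
```

With the `requests` library, the exceptions to retry on are its own classes, so the call would pass something like `retry_on=(requests.exceptions.ConnectionError, requests.exceptions.Timeout)`.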

Conclusion: Are Microservices Right for You?

Microservices architecture is a powerful approach for building large-scale, adaptable, and resilient applications. By breaking a complex system into manageable, independent services, it empowers teams to develop and deploy faster, scale with precision, and innovate with less risk of destabilizing the entire system. This is especially true for global businesses, such as a modern travel-tech company serving digital nomads, where components like booking, payments, and user profiles must evolve independently and scale globally.

However, this power comes at the cost of increased operational complexity. Managing a distributed system requires a mature DevOps culture, significant investment in automation and tooling, and a deep understanding of network performance and failure modes. Before embarking on a microservices journey, carefully evaluate whether the benefits of scalability and agility outweigh the inherent complexities for your specific project. For those ready to take the plunge, the next steps involve mastering containerization with Docker and Kubernetes and exploring a service mesh such as Istio to manage the intricate dance of inter-service communication.