Introduction: The Evolution of Cloud Networking

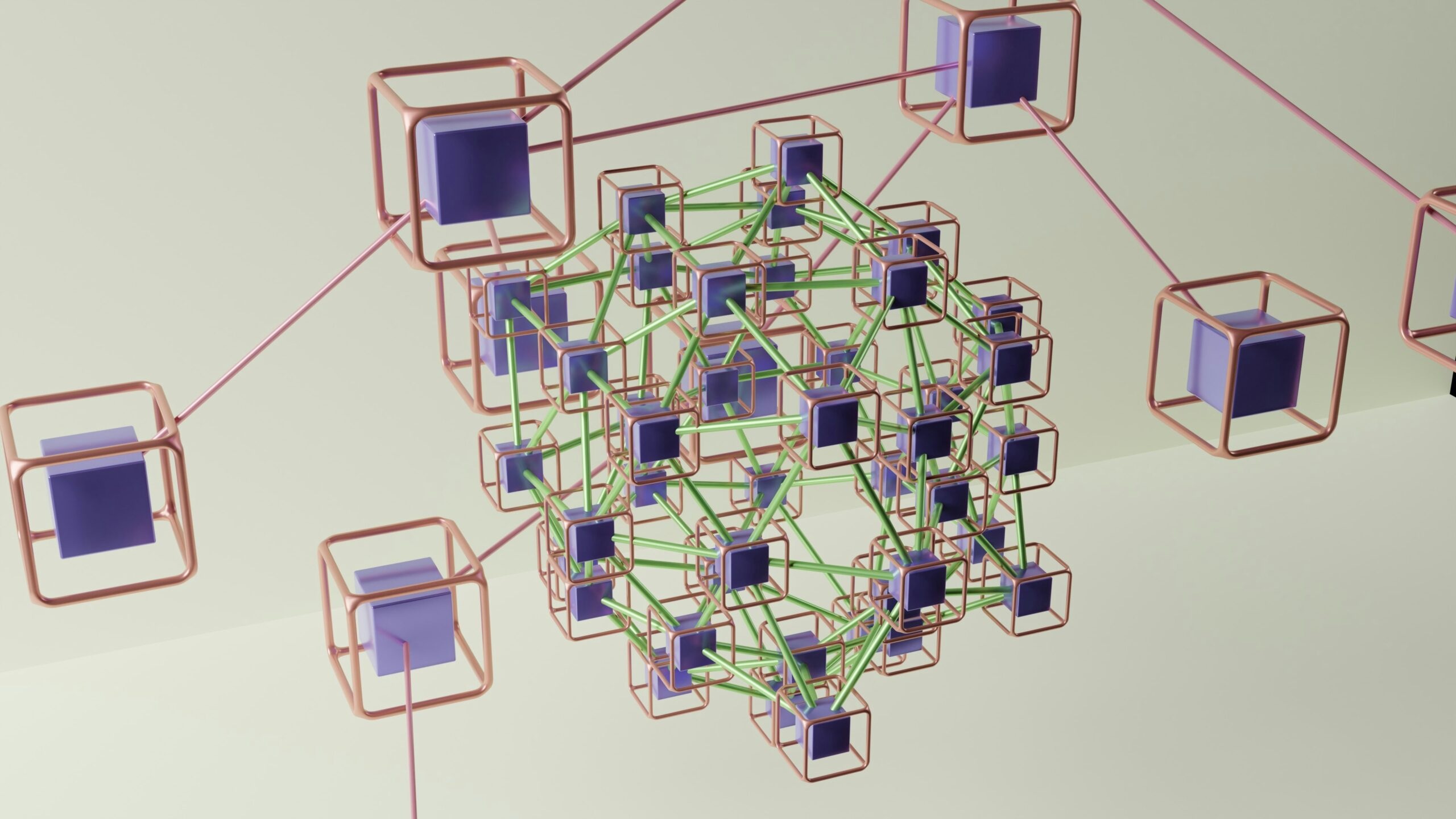

In the rapidly evolving landscape of cloud networking and distributed systems, the shift from monolithic architectures to microservices has fundamentally changed how we approach network architecture. As organizations scale their applications to handle millions of requests per second, the number of service-to-service interactions grows much faster than the number of services. So does the effort needed to route, secure, and observe those interactions. This is where the concept of a Service Mesh becomes critical.

Traditionally, network engineers and system administrators relied on physical network devices such as routers and switches, combined with static load-balancing configurations, to manage traffic. In a dynamic containerized environment, however, workloads are created and destroyed constantly and their IP addresses change with them. Static IPs and manually maintained DNS records are therefore insufficient. A Service Mesh provides a dedicated infrastructure layer for service-to-service communication between microservices, most commonly using a sidecar proxy pattern.

This article examines the technical architecture of Service Meshes. It explores how they abstract away the complexity of the network layers, strengthen network security, and provide deep observability. Whether you are working in FinTech or building the next global travel-tech platform for the digital-nomad community, understanding these concepts is essential for modern DevOps networking.

Section 1: Core Concepts and the Data Plane

At its core, a Service Mesh decouples application logic from network logic. In OSI Model terms, your application concentrates on business logic at the Application Layer. The mesh takes over connection handling at the Transport Layer (TCP connections and TLS encryption) as well as request-level concerns such as routing, retries, and timeouts at Layer 7. It typically consists of two main components:

- the Control Plane, which holds configuration and policy;
- the Data Plane, which carries the actual traffic.

The Sidecar Pattern

The Data Plane is composed of lightweight proxies deployed alongside your application code, known as “sidecars.” These proxies intercept all network traffic entering and leaving the service. This allows sophisticated handling of HTTP/1.1, HTTP/2, gRPC, and raw TCP streams without changing the application code. If the application encrypts a connection with TLS itself, however, the proxy sees only opaque bytes. It can then apply TCP-level policy to that traffic but not HTTP-level routing.

Popular implementations such as Istio use the Envoy proxy as the sidecar. These proxies handle tasks such as service discovery, load balancing, and telemetry collection. Unlike traditional VPN setups or perimeter firewalls, the mesh enforces security and policy at the granularity of individual services.

Below is an example of a Kubernetes Deployment configured for automatic sidecar injection. In practice, injection is performed by Istio's mutating admission webhook, usually enabled for a whole namespace with the `istio-injection=enabled` label. Understanding the per-workload settings is still vital for network troubleshooting.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: travel-booking-service
  namespace: travel-tech-prod
  labels:
    app: booking
spec:
  replicas: 3
  selector:
    matchLabels:
      app: booking
  template:
    metadata:
      labels:
        app: booking
        # Asks the injection webhook to add the sidecar to pods built from this template
        sidecar.istio.io/inject: "true"
      annotations:
        # Proxy CPU and memory *requests* for Network Performance tuning
        # (sidecar.istio.io/proxyCPULimit and proxyMemoryLimit set the limits)
        sidecar.istio.io/proxyCPU: "100m"
        sidecar.istio.io/proxyMemory: "128Mi"
    spec:
      containers:
        - name: booking-api
          image: travel-booking:v2.1
          ports:
            - containerPort: 8080
          env:
            - name: DATABASE_URL
              value: "jdbc:postgresql://db-service:5432/bookings"
```

In this configuration, the application team does not need to think about how packets reach their destination; the mesh handles that abstraction. Suppose `booking-api` calls another service by its Kubernetes DNS name (for example, `http://payment-service:8080`). The iptables rules installed when the pod starts transparently redirect that outbound connection to the sidecar. The sidecar then picks a healthy endpoint according to the routing and load-balancing configuration pushed by the Control Plane. None of this requires changes to the application's code or connection settings.
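Injection is decided per pod, so a common troubleshooting step is to confirm that the injection key sits on the pod template rather than on the Deployment's own metadata. The webhook only ever sees pods. The helper below is a hypothetical sketch: `injection_requested` and `key_on_wrong_object` are invented names. It inspects a manifest already parsed into a Python dict, for example from `kubectl get deployment -o json`. It accepts both the label form and the older annotation form of `sidecar.istio.io/inject`.

```python
from typing import Any, Dict

INJECT_KEY = "sidecar.istio.io/inject"


def injection_requested(deployment: Dict[str, Any]) -> bool:
    """Return True if the pod template asks for sidecar injection.

    Only the manifest is inspected; the namespace-level istio-injection
    label and mesh-wide defaults are deliberately ignored here.
    """
    meta = deployment.get("spec", {}).get("template", {}).get("metadata", {})
    # Check the label first, then fall back to the older annotation form.
    for section in ("labels", "annotations"):
        value = meta.get(section, {}).get(INJECT_KEY)
        if value is not None:
            return str(value).lower() == "true"
    return False


def key_on_wrong_object(deployment: Dict[str, Any]) -> bool:
    """Flag the common mistake of putting the key on the Deployment, not the pod template."""
    meta = deployment.get("metadata", {})
    return any(INJECT_KEY in meta.get(s, {}) for s in ("labels", "annotations"))


# Mirrors the Deployment above, but with the key placed on the wrong object.
broken = {
    "metadata": {"name": "travel-booking-service",
                 "labels": {"app": "booking", INJECT_KEY: "true"}},
    "spec": {"template": {"metadata": {"labels": {"app": "booking"}}}},
}
print(injection_requested(broken), key_on_wrong_object(broken))  # False True
```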

Section 2: Traffic Management and Reliability

One of the primary reasons organizations adopt a Service Mesh is to gain granular control over traffic behavior. In a traditional network design, routing decisions come from routing tables that are populated statically or by protocols such as BGP. In a Service Mesh, routing is determined by policies defined in the Control Plane and pushed to every proxy in the Data Plane.
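To make the Control Plane/Data Plane split concrete, here is a toy model in Python. It is not how Istio's xDS configuration protocol works in detail. `ControlPlane`, `Sidecar`, and `push_route` are invented names. The model only illustrates the idea that policy is defined once, centrally, and every proxy ends up holding the same version of it.

```python
from dataclasses import dataclass, field
from typing import Dict, List

RouteTable = Dict[str, Dict[str, int]]  # host -> {subset: weight}


@dataclass
class Sidecar:
    """Toy data-plane proxy: it only stores the configuration it is sent."""
    pod: str
    routes: RouteTable = field(default_factory=dict)
    version: int = 0

    def apply(self, routes: RouteTable, version: int) -> None:
        # Swap in a copy of the whole table at once, like a config update.
        self.routes = {host: dict(w) for host, w in routes.items()}
        self.version = version


@dataclass
class ControlPlane:
    """Toy control plane: holds desired policy and pushes it to every proxy."""
    sidecars: List[Sidecar] = field(default_factory=list)
    routes: RouteTable = field(default_factory=dict)
    version: int = 0

    def register(self, sidecar: Sidecar) -> None:
        self.sidecars.append(sidecar)
        sidecar.apply(self.routes, self.version)  # late joiners get current config

    def push_route(self, host: str, weights: Dict[str, int]) -> None:
        self.routes[host] = dict(weights)
        self.version += 1
        for sidecar in self.sidecars:
            sidecar.apply(self.routes, self.version)


cp = ControlPlane()
proxies = [Sidecar(pod=f"booking-{i}") for i in range(3)]
for proxy in proxies:
    cp.register(proxy)
cp.push_route("payment-service", {"v1": 90, "v2": 10})
print([p.version for p in proxies])  # [1, 1, 1]
```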

Canary Deployments and Traffic Splitting

Software-defined approaches like this allow sophisticated deployment strategies. For example, when rolling out a new version of a web API, you might route only 5% of traffic to the new version and monitor it for errors before a full rollout. This limits the blast radius of potential bugs.
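One way to picture weighted routing is as a weighted random choice made independently for each request. The simulation below is a simplification; Envoy's actual endpoint and cluster selection differs in detail. `pick_subset` and `simulate` are hypothetical names, and the 95/5 split mirrors the 5% canary described above.

```python
import random
from collections import Counter
from typing import Dict


def pick_subset(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose a subset name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: Dict[str, int], requests: int, seed: int = 42) -> Counter:
    """Route `requests` simulated requests and count where each one went."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return Counter(pick_subset(weights, rng) for _ in range(requests))


counts = simulate({"v1": 95, "v2": 5}, requests=10_000)
share_v2 = counts["v2"] / 10_000
print(f"v2 received {share_v2:.1%} of requests")  # close to 5%, not exactly 5%
```

The split only holds on average, which is why canary dashboards should compare error rates per subset rather than raw error counts.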

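The following example demonstrates an Istio `VirtualService` configuration. It acts as a smart router above the network layer, directing traffic by weight rather than by plain round-robin load balancing. The `v1` and `v2` subsets it references must be defined in a `DestinationRule` for the same host, typically by matching a `version` pod label. Requests routed to an undefined subset fail.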

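```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: payment-service-route
spec:
  hosts:
    - payment-service
  http:
    - route:
        - destination:
            host: payment-service
            subset: v1
          weight: 90
        - destination:
            host: payment-service
            subset: v2
          weight: 10
      timeout: 2s
      retries:
        attempts: 3
        # Each attempt must be shorter than the overall 2s timeout; otherwise a
        # single slow attempt consumes the whole budget and no retry can happen
        perTryTimeout: 500ms
        retryOn: gateway-error,connect-failure,refused-stream
```

This configuration handles common transient failures automatically. The sidecar retries the request when:

- the upstream responds with a 502, 503, or 504;
- a connection to the upstream cannot be established;
- an HTTP/2 stream is refused.

It allows up to three retries, all within the 2-second overall timeout. Dropped packets on an otherwise healthy connection are already recovered by TCP retransmission and never surface at this layer.

This removes the need for hand-written retry loops in the application code, simplifying network programming for developers. Retries are only safe for idempotent operations, though. A `POST` to a payment service that is retried after a failure could be processed twice unless the service deduplicates requests.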
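The interaction between `timeout`, `attempts`, and `perTryTimeout` is easier to see in code. This is a hypothetical in-process model, not Envoy's implementation. Each attempt gets its own deadline, retries happen only for the listed failure kinds, and nothing is attempted once the overall budget is spent. The `Outcome` values loosely mirror the `retryOn` conditions. Treating a per-try timeout as retryable is an assumption of the sketch. The defaults assume a 2-second overall budget with 500 ms per attempt. Time is simulated rather than slept.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Outcome(Enum):
    OK = "ok"
    GATEWAY_ERROR = "gateway-error"      # upstream answered 502, 503, or 504
    CONNECT_FAILURE = "connect-failure"  # no connection could be opened
    CLIENT_ERROR = "client-error"        # e.g. a 400; retrying will not help


RETRYABLE = {Outcome.GATEWAY_ERROR, Outcome.CONNECT_FAILURE}


@dataclass
class Attempt:
    outcome: Outcome
    duration: float  # seconds the upstream would take to answer


def call_with_retries(attempts: List[Attempt], retries: int = 3,
                      per_try_timeout: float = 0.5,
                      overall_timeout: float = 2.0) -> List[str]:
    """Replay scripted attempts against a simulated clock and log each step."""
    elapsed = 0.0
    log: List[str] = []
    for i, attempt in enumerate(attempts[: retries + 1]):  # first try + retries
        remaining = overall_timeout - elapsed
        if remaining <= 0:
            log.append("overall timeout")
            return log
        budget = min(per_try_timeout, remaining)
        if attempt.duration > budget:
            elapsed += budget
            log.append(f"try {i + 1}: timed out")
            continue  # assumption: a per-try timeout is treated as retryable
        elapsed += attempt.duration
        log.append(f"try {i + 1}: {attempt.outcome.value}")
        if attempt.outcome not in RETRYABLE:
            return log  # success or a non-retryable error ends the call
    log.append("gave up")
    return log


print(call_with_retries([Attempt(Outcome.GATEWAY_ERROR, 0.1),
                         Attempt(Outcome.OK, 0.2)]))
# ['try 1: gateway-error', 'try 2: ok']

# With the per-try timeout equal to the overall timeout, one slow attempt uses the whole budget.
print(call_with_retries([Attempt(Outcome.OK, 3.0)] * 4, per_try_timeout=2.0))
# ['try 1: timed out', 'overall timeout']
```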

Circuit Breaking

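Cascading failures happen when one failing service takes down the entire system. To prevent them, Service Meshes implement circuit breaking. The name comes from electrical circuit breakers, but here the principle is applied to software requests. In Istio it takes two forms, both configured in a `DestinationRule`:

- Connection-pool limits make the proxy fail fast with a 503 once too many connections or pending requests are queued for an upstream.
- Outlier detection temporarily removes individual endpoints that keep returning 5xx errors.

Both stop callers from exhausting their resources while waiting on a dependency that cannot answer.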

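```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: inventory-service-cb
spec:
  host: inventory-service
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100
      http:
        http1MaxPendingRequests: 10
        maxRequestsPerConnection: 10
    outlierDetection:
      consecutive5xxErrors: 5
      interval: 1m
      baseEjectionTime: 15m
      maxEjectionPercent: 100
```

In this scenario, outlier detection operates per endpoint. Any individual `inventory-service` pod that returns five consecutive 5xx responses is ejected from the load-balancing pool for 15 minutes, while healthy pods keep serving. The `interval` controls how often the proxy runs its ejection analysis sweep. A `maxEjectionPercent` of 100 means every pod may be ejected at once, which can leave callers with no endpoints at all, so a lower value is often safer. The ejection window gives the failing pod time to recover while the system administration team investigates the root cause.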
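The per-endpoint bookkeeping can be sketched as a small state machine. This is a simplified model that assumes Envoy-style behaviour:

- each ejection lasts `baseEjectionTime` multiplied by the number of times that host has been ejected;
- that duration is capped at the larger of 300 seconds and `baseEjectionTime`;
- at least one host may always be ejected, with `maxEjectionPercent` limiting the rest.

`OutlierDetector` and `HostState` are hypothetical names. Real Envoy also un-ejects hosts on the `interval` sweep and runs other detectors.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class HostState:
    consecutive_5xx: int = 0
    times_ejected: int = 0
    ejected_until: float = 0.0  # simulated seconds


@dataclass
class OutlierDetector:
    hosts: Dict[str, HostState]
    consecutive_5xx_errors: int = 5
    base_ejection_time: float = 15 * 60
    max_ejection_percent: int = 100
    max_ejection_time: float = 300.0  # assumed Envoy-style default cap

    def is_ejected(self, host: str, now: float) -> bool:
        return now < self.hosts[host].ejected_until

    def record(self, host: str, status: int, now: float) -> None:
        """Feed one response status for `host` observed at time `now`."""
        state = self.hosts[host]
        if status < 500:
            state.consecutive_5xx = 0  # any non-5xx response resets the streak
            return
        state.consecutive_5xx += 1
        if state.consecutive_5xx >= self.consecutive_5xx_errors and self._may_eject(now):
            state.times_ejected += 1
            cap = max(self.max_ejection_time, self.base_ejection_time)
            state.ejected_until = now + min(self.base_ejection_time * state.times_ejected, cap)
            state.consecutive_5xx = 0

    def _may_eject(self, now: float) -> bool:
        ejected = sum(1 for h in self.hosts if self.is_ejected(h, now))
        if ejected == 0:
            return True  # at least one host may always be ejected
        return (ejected + 1) * 100 <= self.max_ejection_percent * len(self.hosts)


detector = OutlierDetector(hosts={f"inventory-{i}": HostState() for i in range(3)})
for _ in range(5):
    detector.record("inventory-0", 503, now=0.0)
print(detector.is_ejected("inventory-0", now=60.0))   # True
print(detector.is_ejected("inventory-1", now=60.0))   # False
print(detector.is_ejected("inventory-0", now=901.0))  # False: 15 minutes have passed
```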

Section 3: Security, Observability, and Network Programming

Network security is a paramount concern, especially when data traverses between microservices. Traditional perimeter security (firewalls) does little to protect internal “East-West” traffic between services. A Service Mesh can use mutual TLS (mTLS) to encrypt and authenticate traffic between services automatically, without changes to application code.

Zero Trust Network Architecture

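With mTLS, every workload has a strong, certificate-based identity, and the mesh manages the creation, distribution, and rotation of those certificates. Suppose an attacker gains a foothold elsewhere on the network, through a compromised WiFi segment or a compromised container in another pod. Without that service's certificate and key, they cannot read the traffic through packet analysis or impersonate the service. Note that Istio's default `PERMISSIVE` mode still accepts plaintext connections; only `STRICT` mode rejects them.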
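In Istio, the identity carried in each workload certificate is a SPIFFE ID derived from the pod's namespace and Kubernetes service account. It has the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`. A receiving service can therefore authorize callers by identity instead of by IP address. The sketch below parses such an ID and checks it against an allowlist. The function names and the `cluster.local` trust domain in the example are assumptions. In a real mesh you would express this rule as an Istio `AuthorizationPolicy` rather than in application code.

```python
from typing import NamedTuple, Optional, Set
from urllib.parse import urlparse


class WorkloadIdentity(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def parse_spiffe_id(uri: str) -> Optional[WorkloadIdentity]:
    """Parse spiffe://<trust-domain>/ns/<ns>/sa/<sa>; return None if malformed."""
    parsed = urlparse(uri)
    if parsed.scheme != "spiffe" or not parsed.netloc:
        return None
    parts = parsed.path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        return None
    if not parts[1] or not parts[3]:
        return None  # empty namespace or service account
    return WorkloadIdentity(parsed.netloc, parts[1], parts[3])


def is_allowed(uri: str, allowed: Set[WorkloadIdentity]) -> bool:
    """Authorize a caller by its workload identity, not by its IP address."""
    identity = parse_spiffe_id(uri)
    return identity is not None and identity in allowed


allowed = {WorkloadIdentity("cluster.local", "travel-tech-prod", "booking")}
print(is_allowed("spiffe://cluster.local/ns/travel-tech-prod/sa/booking", allowed))  # True
print(is_allowed("spiffe://cluster.local/ns/default/sa/booking", allowed))           # False
```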

Observability and Distributed Tracing

Debugging latency issues in a microservices architecture can be a nightmare without proper tooling. Tools like Wireshark are excellent for deep packet inspection, but they do not provide a holistic view of a distributed transaction. Service Meshes integrate with tracing backends such as Jaeger or Zipkin to visualize the request flow.

For tracing to work, however, the application must propagate specific headers. The sidecars generate spans and send them to the collector. A proxy cannot know which outgoing request was caused by which incoming one, though. The application must therefore copy the trace-context headers from each incoming request onto the outgoing requests it makes. These include `x-request-id`, the `x-b3-*` headers, and the W3C `traceparent` header. This is the one part of distributed tracing that the mesh cannot do for you.

Here is a Python example, a small Flask application, that demonstrates header propagation:

```python
import logging

import requests
from flask import Flask, request

app = Flask(__name__)
logger = logging.getLogger(__name__)

# Trace-context headers to propagate. The x-b3-* headers are the B3 (Zipkin)
# format; traceparent/tracestate are W3C Trace Context; x-request-id and
# x-ot-span-context are also used by Istio/Envoy.
TRACING_HEADERS = [
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
    "traceparent",
    "tracestate",
]


def get_forward_headers(incoming):
    """Copy whichever tracing headers are present on the incoming request."""
    # Flask's request.headers is case-insensitive, so lowercase names match.
    return {h: incoming.headers[h] for h in TRACING_HEADERS if h in incoming.headers}


@app.route("/book-flight", methods=["POST"])
def book_flight():
    # Extract trace context from the incoming request
    forward_headers = get_forward_headers(request)

    # Plain Kubernetes service name: cluster DNS resolves it, and the sidecar
    # intercepts the connection and routes it.
    payment_service_url = "http://payment-service:8080/process"

    try:
        # Pass the trace context to the next service in the chain
        response = requests.post(
            payment_service_url,
            json={"amount": 300, "currency": "USD"},
            headers=forward_headers,
            timeout=5,
        )
        response.raise_for_status()  # treat 4xx/5xx from payment as failures
        return {"status": "success", "transaction_id": response.json().get("id")}
    except (requests.exceptions.RequestException, ValueError) as e:
        # ValueError also covers an unparseable JSON body on older requests versions
        logger.error("Network error connecting to payment service: %s", e)
        return {"status": "failed"}, 503


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8080)
```

This code shows where API design meets network protocols. Because the trace context is forwarded, the tracing backend can stitch the spans reported by the sidecars into a complete trace. The trace shows exactly how long the request spent in the `booking` service versus the `payment` service, which helps pinpoint bandwidth bottlenecks or processing delays.
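Conceptually, the tracing backend reassembles the spans it receives into a tree. It uses the shared trace ID and each span's parent span ID, which is exactly the relationship the forwarded headers preserve. The sketch below shows how per-service time can be derived once that tree exists. It uses hypothetical `Span` records with made-up timings and one span per service. A real mesh reports separate client-side and server-side spans from each sidecar. A span's self time is its duration minus the time covered by its direct children, assuming for simplicity that children do not overlap.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None for the root span
    service: str
    start_ms: float
    end_ms: float

    @property
    def duration(self) -> float:
        return self.end_ms - self.start_ms


def self_time_by_service(spans: List[Span], trace_id: str) -> Dict[str, float]:
    """Per-service time excluding direct children (assumes children don't overlap)."""
    in_trace = [s for s in spans if s.trace_id == trace_id]
    child_time: Dict[str, float] = defaultdict(float)
    for s in in_trace:
        if s.parent_id is not None:
            child_time[s.parent_id] += s.duration
    totals: Dict[str, float] = defaultdict(float)
    for s in in_trace:
        totals[s.service] += s.duration - child_time[s.span_id]
    return dict(totals)


# Made-up timings: booking handles the request, payment is its child call.
spans = [
    Span("trace-1", "a1", None, "booking", 0.0, 120.0),
    Span("trace-1", "b7", "a1", "payment", 20.0, 110.0),
    Span("trace-2", "c3", None, "booking", 0.0, 40.0),  # different trace, ignored
]
print(self_time_by_service(spans, "trace-1"))  # {'booking': 30.0, 'payment': 90.0}
```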

Section 4: Advanced Techniques and Customization

For advanced network engineering scenarios, standard configuration might not suffice. Envoy-based meshes such as Istio let you extend the data plane with WebAssembly (Wasm) or Lua filters. This brings network programming directly into the infrastructure layer.

Imagine you are running a Travel Photography platform and need to strip specific metadata headers for privacy compliance before requests leave your cluster, or you need to implement custom authentication logic that standard REST API gateways don’t support.

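Below is an example of an EnvoyFilter (an Istio resource) that uses a Lua script on the `frontend` workload. The script adds a custom header to responses and strips the `Server` header. This demonstrates the power of programmable network virtualization. EnvoyFilter patches Envoy's generated configuration directly, so treat it as a last resort: it is tightly coupled to Envoy internals and can break across Istio upgrades.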

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: add-custom-header
  namespace: travel-tech-prod
spec:
  workloadSelector:
    labels:
      app: frontend
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.lua
          typed_config:
            "@type": "type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua"
            inlineCode: |
              function envoy_on_response(response_handle)
                -- Inject a custom header for debugging or tracking
                response_handle:headers():add("X-Travel-Tech-Region", "EU-West")
                -- Security: remove server version information
                response_handle:headers():remove("Server")
              end
```

This level of control lets DevOps networking teams enforce policies across workloads without changing the application code base. It effectively turns the network into a programmable substrate. Verify the result with a real request, because the proxy's own `server_header_transformation` setting can cause Envoy to write a `server` header after the filter chain has run.
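For comparison, here is roughly what the same response rewrite looks like when each application has to do it itself, written as a Python WSGI middleware. This is a hypothetical sketch; `RegionHeaderMiddleware` is an invented name. It shows the per-service code that a mesh-level filter makes unnecessary.

```python
from typing import Callable, Iterable, List, Tuple

Headers = List[Tuple[str, str]]


class RegionHeaderMiddleware:
    """Hypothetical WSGI middleware: add X-Travel-Tech-Region, drop Server."""

    def __init__(self, app: Callable, region: str = "EU-West") -> None:
        self.app = app
        self.region = region

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        def patched_start_response(status: str, headers: Headers, exc_info=None):
            # Header names are case-insensitive, so compare in lower case.
            kept = [(k, v) for k, v in headers if k.lower() != "server"]
            kept.append(("X-Travel-Tech-Region", self.region))
            return start_response(status, kept, exc_info)

        return self.app(environ, patched_start_response)


def demo_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain"), ("Server", "demo/1.0")])
    return [b"ok"]


captured = {}


def fake_start_response(status, headers, exc_info=None):
    captured["headers"] = headers


body = RegionHeaderMiddleware(demo_app)({}, fake_start_response)
print(captured["headers"])
# [('Content-Type', 'text/plain'), ('X-Travel-Tech-Region', 'EU-West')]
```

In a Flask service this would be wired in with `app.wsgi_app = RegionHeaderMiddleware(app.wsgi_app)`. It would also have to be repeated in every service and every language you run, which is exactly the duplication a mesh-level filter avoids. Some WSGI servers add their own `Server` header outside the application, where middleware cannot reach it.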

Section 5: Best Practices and Optimization

Implementing a Service Mesh is a significant architectural decision. It introduces complexity and resource overhead. Here are key best practices to ensure success.

Resource Management and Latency

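Sidecars consume CPU and memory, and in a cluster with thousands of pods this adds up, so monitor the performance impact. Each service-to-service call passes through two proxies, the caller's sidecar and the callee's. Every hop adds a small amount of latency, usually no more than a few milliseconds depending on configuration and load, and this compounds along long call chains. For high-frequency trading or latency-critical real-time wireless applications, that overhead may be significant. Always benchmark with load-testing tools, comparing latency percentiles with and without sidecar injection.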
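A quick back-of-the-envelope calculation shows how the per-pod figures from the Deployment example add up. The sketch below assumes the `100m` CPU and `128Mi` memory requests shown earlier and a hypothetical fleet of 2,000 pods. It parses only the unit suffixes it needs.

```python
from typing import Tuple


def cpu_to_cores(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as '100m' or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def memory_to_mib(quantity: str) -> float:
    """Convert '128Mi' or '2Gi' to MiB (only these two suffixes are handled)."""
    if quantity.endswith("Mi"):
        return float(quantity[:-2])
    if quantity.endswith("Gi"):
        return float(quantity[:-2]) * 1024
    raise ValueError(f"unsupported memory quantity: {quantity}")


def fleet_overhead(pods: int, cpu: str = "100m", memory: str = "128Mi") -> Tuple[float, float]:
    """Total sidecar (cores, GiB) requested across `pods` pods."""
    cores = pods * cpu_to_cores(cpu)
    gib = pods * memory_to_mib(memory) / 1024
    return cores, gib


cores, gib = fleet_overhead(2000)
print(f"{cores:.0f} cores and {gib:.0f} GiB reserved for sidecars")
# 200 cores and 250 GiB reserved for sidecars
```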

Networking Fundamentals Still Apply

Even with a mesh, you cannot ignore IPv4, IPv6, subnetting, and CIDR blocks. The underlying Kubernetes CNI (Container Network Interface) plugin must be robust. If your pod CIDR is undersized and you run out of IP addresses, the mesh cannot save you. Sidecars share their pod's network namespace and IP address, so they do not consume addresses of their own. Your addressing plan has to account for the number of pods, not the number of containers.
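A sidecar shares its pod's IP address, so the addressing question reduces to how many pods the pod CIDR can hold. The Python standard library's `ipaddress` module makes the arithmetic explicit. The `10.244.0.0/16` cluster range and the per-node `/24` split below are assumed example values, not requirements.

```python
import ipaddress
from typing import Tuple


def node_capacity(cluster_cidr: str, node_prefix: int) -> Tuple[int, int]:
    """Return (how many per-node subnets fit, addresses in each subnet)."""
    network = ipaddress.ip_network(cluster_cidr)
    if node_prefix < network.prefixlen:
        raise ValueError("node subnets must be smaller than the cluster range")
    first = next(network.subnets(new_prefix=node_prefix))
    subnet_count = 2 ** (node_prefix - network.prefixlen)
    return subnet_count, first.num_addresses


nodes, per_node = node_capacity("10.244.0.0/16", 24)
print(nodes, per_node)  # 256 256
```

With one address per pod, a 256-address node range comfortably covers the kubelet's default limit of 110 pods per node, whether or not those pods carry sidecars. The cluster range, however, caps this layout at 256 nodes.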

Security Posture

Do not rely solely on the mesh for security; practice “defense in depth.” Follow API security best practices, validate inputs to prevent injection attacks, and use Kubernetes NetworkPolicies as a fallback to restrict traffic at the IP and port level. Regular packet analysis and audit logs help confirm that the mTLS configuration actually enforces the expected rules.
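As a concrete example of the IP-level fallback, a Kubernetes `NetworkPolicy` can restrict which pods may reach the booking service at all, independently of the mesh. The hypothetical helper below builds such a manifest as a Python dict and serializes it to JSON, which `kubectl apply -f` accepts just as it accepts YAML. The `app: frontend` caller, the namespace, and port 8080 are assumptions drawn from the earlier examples. NetworkPolicy is only enforced if the cluster's CNI plugin supports it.

```python
import json
from typing import Any, Dict


def allow_only_from(namespace: str, target_app: str, source_app: str,
                    port: int) -> Dict[str, Any]:
    """Build a NetworkPolicy admitting TCP traffic to target_app only from source_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{source_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            # Once a pod is selected, any ingress not allowed below is denied.
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


policy = allow_only_from("travel-tech-prod", "booking", "frontend", 8080)
print(json.dumps(policy, indent=2))
```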

Gradual Adoption

Don’t try to mesh everything at once. Start with the “edge” services or a specific domain (such as the checkout flow). Use the mesh for observability first, with mTLS in permissive mode, before enabling active traffic enforcement or strict mTLS. Moving in small, reversible steps keeps each change easy to verify and to roll back.
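In Istio, the observability-first rollout maps onto the `PeerAuthentication` resource. `PERMISSIVE` mode accepts both plaintext and mTLS while workloads are being onboarded. `STRICT` mode rejects plaintext once every client has a sidecar. The hypothetical helper below generates the namespace-wide resource for either phase. The `security.istio.io/v1beta1` API version is an assumption; newer Istio releases also serve `security.istio.io/v1`. Check what your installation supports.

```python
import json
from typing import Any, Dict

# DISABLE and UNSET are also valid modes, but are deliberately excluded here.
VALID_MODES = {"PERMISSIVE", "STRICT"}


def peer_authentication(namespace: str, mode: str) -> Dict[str, Any]:
    """Namespace-wide PeerAuthentication for one rollout phase."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        # Omitting spec.selector applies the policy to every workload in the namespace.
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": mode}},
    }


for phase in ("PERMISSIVE", "STRICT"):
    print(json.dumps(peer_authentication("travel-tech-prod", phase)))
```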

Conclusion

The Service Mesh represents a paradigm shift in Network Architecture for cloud-native applications. By abstracting the Network Layers and providing a programmable data plane, it empowers Network Engineers and developers to build resilient, secure, and observable systems. From handling TCP/IP connections to managing complex GraphQL and REST API traffic, the mesh is the glue that holds modern microservices together.

As we move toward even more distributed environments—spanning Edge Computing and multi-cloud setups—the role of the Service Mesh will only grow. Whether you are optimizing for Bandwidth, securing sensitive data with mTLS, or simply trying to debug a complex distributed transaction, mastering these tools is no longer optional; it is a critical skill for the modern technologist. Start small, experiment with the code examples provided, and leverage the power of Software-Defined Networking to scale your infrastructure confidently.