The Architect’s Guide to Modern Network Development

In today’s interconnected world, the network is the backbone of every digital service, from global financial systems to the apps a digital nomad relies on while traveling. The discipline of Network Development has evolved far beyond configuring routers and switches. It now involves architecting complex, resilient, and secure distributed systems that can withstand failures, scale globally, and maintain data integrity. Whether you’re a Network Engineer, a DevOps professional, or a software developer, you need to understand the principles of building robust network protocols and services. This article examines the critical challenges and advanced solutions in network development, covering everything from fundamental protocol design to consensus mechanisms and production-ready diagnostics.

We will explore the common pitfalls that can lead to catastrophic failures—such as data loss, deadlocks, and integrity breaches—and provide practical, code-driven solutions. By the end, you’ll have a comprehensive framework for designing, implementing, and maintaining high-performance networks that form the foundation of modern Cloud Networking, microservices, and decentralized applications.

Section 1: The Cornerstone of Trust – Ensuring Chain Integrity

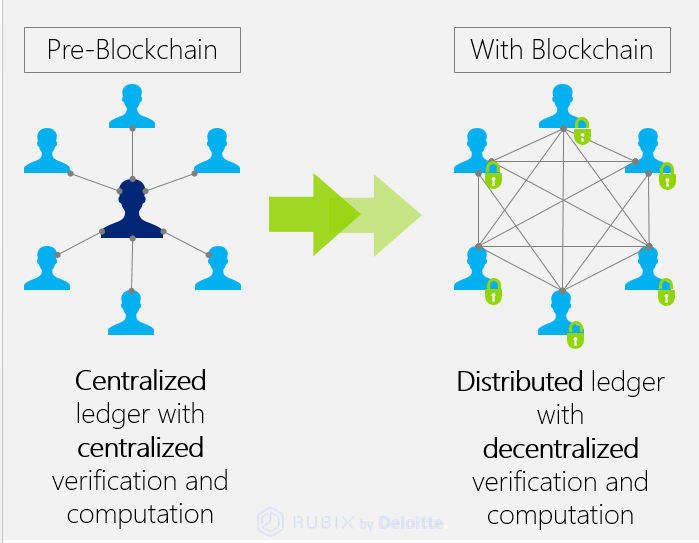

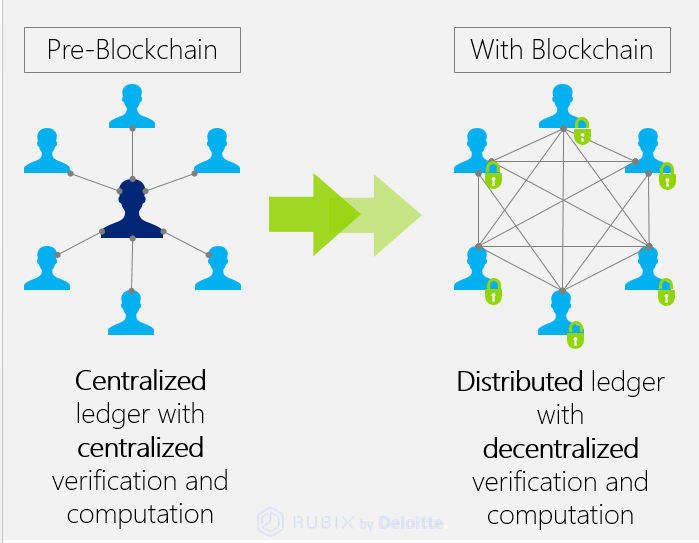

At the heart of any stateful distributed network lies the challenge of maintaining a consistent and unbroken chain of data. In systems like blockchains or distributed databases, each new piece of data (a block or a transaction log) cryptographically depends on the one before it. A break in this chain invalidates the entire state, leading to a complete loss of trust and functionality. This is a common and critical failure mode during the initial phases of Network Design.

The “Broken Chain” Problem

A “broken chain” occurs when a node in the network accepts a new block or data packet without having its immediate predecessor. This can happen for several reasons:

- Race Conditions: Multiple nodes produce new blocks simultaneously, and a receiving node processes a later block before an earlier one arrives.

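- Packet Loss: Datagrams can be dropped in transit. UDP does not retransmit them, and although TCP recovers lost segments within a single connection, a dropped or reset connection can still leave gaps in the stream of blocks a node receives.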

- Faulty Logic: A bug in the node’s software might allow it to “fall back” to a default or deterministic value if the previous block isn’t found, creating an orphaned block that breaks the cryptographic lineage.

Transport-layer guarantees do not help here: TCP orders bytes within a single connection, but it knows nothing about the order in which blocks arrive from different peers. Sequence and integrity therefore have to be enforced by strict validation rules at the application layer before any new data is committed to storage.

Implementing Strict Predecessor Validation

The most effective defense is a simple but non-negotiable rule: a node cannot accept block `N` unless it has already validated and stored block `N-1`. The link between blocks is typically a cryptographic hash. Block `N` contains the hash of block `N-1`, creating a tamper-evident chain.

Here is a Python example demonstrating a basic block validator class that enforces this rule. This type of logic is fundamental in Protocol Implementation.


```python
import hashlib


class SimpleBlock:
    def __init__(self, index, previous_hash, data):
        self.index = index
        self.previous_hash = previous_hash
        self.data = data
        self.hash = self.calculate_hash()

    def calculate_hash(self):
        """Calculates the SHA-256 hash of the block's contents."""
        block_string = str(self.index) + str(self.previous_hash) + str(self.data)
        return hashlib.sha256(block_string.encode()).hexdigest()


class BlockchainValidator:
    def __init__(self):
        # A simple in-memory dictionary to act as block storage
        self.block_storage = {}
        # Genesis block
        genesis_block = SimpleBlock(0, "0", "Genesis Block")
        self.block_storage[genesis_block.hash] = genesis_block
        self.latest_hash = genesis_block.hash

    def add_block(self, new_block):
        """Validates and adds a new block to the chain."""
        # Rule 1: Check if the previous block exists in our storage
        if new_block.previous_hash not in self.block_storage:
            print(f"Validation FAILED: Previous block with hash {new_block.previous_hash} not found.")
            return False

        # Rule 2: Verify the new block's hash is correctly calculated
        if new_block.hash != new_block.calculate_hash():
            print("Validation FAILED: Block hash is invalid.")
            return False

        # Rule 3: Ensure the previous_hash matches the latest block's hash
        if new_block.previous_hash != self.latest_hash:
            print(f"Validation FAILED: Chain break detected. Expected prev_hash {self.latest_hash}, got {new_block.previous_hash}.")
            return False

        # All checks passed, add the block
        self.block_storage[new_block.hash] = new_block
        self.latest_hash = new_block.hash
        print(f"Block {new_block.index} successfully added with hash {new_block.hash}.")
        return True


# --- Simulation ---
validator = BlockchainValidator()
last_block = validator.block_storage[validator.latest_hash]

# Correctly add Block 1
block1 = SimpleBlock(1, last_block.hash, "Data for block 1")
validator.add_block(block1)

# Attempt to add Block 3 (skipping Block 2)
block3_broken = SimpleBlock(3, "some_other_hash", "Data for block 3")
validator.add_block(block3_broken)  # This will fail
```

This code snippet enforces the most critical rule of chain integrity. In a real-world scenario, this logic would be part of the core Network Programming loop that processes incoming P2P messages.
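One way to cope with the race condition described earlier, without relaxing the predecessor rule, is to park out-of-order blocks and retry them once their parent arrives. The sketch below is only an illustration under stated assumptions: `OrphanBuffer`, `accept`, and the `max_orphans` limit are hypothetical names that do not come from the validator above, and blocks are modeled as plain dicts with `hash` and `previous_hash` keys.

```python
from collections import defaultdict


class OrphanBuffer:
    """Holds blocks whose predecessor has not been stored yet (hypothetical helper)."""

    def __init__(self, genesis_hash, max_orphans=100):
        self.stored = {genesis_hash}        # hashes of blocks already accepted
        self.waiting = defaultdict(list)    # parent hash -> blocks waiting on it
        self.orphan_count = 0
        self.max_orphans = max_orphans      # bound the memory used by parked blocks

    def accept(self, block):
        """Store the block if its parent is known; otherwise park it.

        Returns the list of block hashes committed as a result of this call.
        """
        parent = block["previous_hash"]
        if parent not in self.stored:
            if self.orphan_count < self.max_orphans:
                self.waiting[parent].append(block)
                self.orphan_count += 1
            return []  # never commit a block without its predecessor

        committed = []
        queue = [block]
        while queue:
            current = queue.pop(0)
            self.stored.add(current["hash"])
            committed.append(current["hash"])
            # Blocks parked on this hash now have a stored parent.
            children = self.waiting.pop(current["hash"], [])
            self.orphan_count -= len(children)
            queue.extend(children)
        return committed


buffer = OrphanBuffer(genesis_hash="h0")
block1 = {"hash": "h1", "previous_hash": "h0"}
block2 = {"hash": "h2", "previous_hash": "h1"}

early = buffer.accept(block2)  # arrives first, parent unknown: parked
late = buffer.accept(block1)   # parent known: commits block1, then block2
print(early, late)
```

The buffer only decides when a block becomes eligible. Each released block would still need to pass the full validation shown earlier (hash check and chain-tip check) before being written to storage.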

Section 2: The Art of Agreement – Implementing Consensus Protocols

In a decentralized system, there is no central authority to dictate the state of the network. Nodes must communicate and agree on the state collectively. This process, known as consensus, is notoriously difficult and a primary focus of Network Architecture. A failure in consensus can lead to network stalls, forks, or deadlocks, where nodes cannot agree on the next valid state, halting all progress.

The Entropy Mismatch Deadlock

One subtle but deadly issue is an “entropy mismatch.” In many systems, a source of randomness (entropy) is used to determine the order of operations, such as selecting the next block producer. If different nodes generate or perceive this entropy differently, they will disagree on who should act next. For example, if Node A believes Node B is the producer for block #31, but Node B believes it’s Node C’s turn, no valid block will be produced or accepted, and the network halts.

Preventing this deadlock requires a dedicated consensus check that runs at critical boundaries (e.g., every 100 blocks) and confirms that all participating nodes share the exact same view of the network state and its derived entropy.
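To make the mismatch concrete, here is a minimal sketch of how producer selection could depend on entropy. It assumes, purely for illustration, that entropy is a SHA-256 digest over recent block hashes and that the producer is chosen by reducing that digest modulo the producer count. `derive_entropy` and `select_producer` are hypothetical names, not a real protocol's API.

```python
import hashlib


def derive_entropy(block_hashes):
    """Hypothetical entropy derivation: SHA-256 over the recent block hashes."""
    joined = "|".join(block_hashes).encode()
    return hashlib.sha256(joined).hexdigest()


def select_producer(entropy_hash, producers):
    """Map an entropy hash (hex string) to one producer deterministically."""
    index = int(entropy_hash, 16) % len(producers)
    return producers[index]


producers = ["node_a", "node_b", "node_c"]

# Two nodes with the same view of recent blocks derive the same entropy.
view_a = ["h28", "h29", "h30"]
view_b = ["h28", "h29", "h30"]
# A third node holds a stale hash for block 30.
view_c = ["h28", "h29", "h30_stale"]

entropy_a = derive_entropy(view_a)
entropy_b = derive_entropy(view_b)
entropy_c = derive_entropy(view_c)

print("A and B agree on entropy:", entropy_a == entropy_b)
print("A and C agree on entropy:", entropy_a == entropy_c)
print("A picks:", select_producer(entropy_a, producers))
print("C picks:", select_producer(entropy_c, producers))
```

Nodes with identical inputs always pick the same producer. A node with a divergent view derives different entropy and will usually, though not always, pick a different producer, which is why comparing the entropy hash itself is a more reliable check than comparing the selected producer.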

An Asynchronous Entropy Consensus Protocol

To solve this without blocking network operations, an asynchronous verification protocol is ideal. When a rotation boundary is reached (e.g., block #30), nodes can start a background process to verify state with their peers.

- Initiate Check: At block #30, Node A sends an `EntropyRequest` message to a random sample of its peers. This message contains its calculated entropy hash for the next epoch.

- Peer Response: Peers receiving the request compare their own entropy hash with the one received. They reply with an `EntropyResponse` message indicating a match or mismatch.

- Tally and Act: Node A collects responses for a short period (e.g., 300ms). If a supermajority of peers agree with its state, it proceeds. If a significant mismatch is detected, it triggers an emergency resynchronization procedure to correct its state before it can participate further.

This approach prevents a single node with a divergent state from derailing the entire network. Below is a conceptual Python example using `asyncio` to demonstrate the non-blocking nature of this check.

```python
import asyncio
import random


class Peer:
    def __init__(self, node_id, entropy_hash):
        self.node_id = node_id
        self.entropy_hash = entropy_hash

    async def handle_entropy_request(self, requester_entropy_hash):
        """Simulates a peer receiving and responding to an entropy request."""
        await asyncio.sleep(random.uniform(0.05, 0.2))  # Simulate network latency
        is_match = (self.entropy_hash == requester_entropy_hash)
        print(f"Peer {self.node_id} responding. Match: {is_match}")
        return {"node_id": self.node_id, "match": is_match}


class Node:
    def __init__(self, node_id, peers):
        self.node_id = node_id
        self.my_entropy_hash = self.calculate_entropy()
        self.peers = peers
        self.entropy_responses = []

    def calculate_entropy(self):
        """Simulates calculating a state-dependent entropy hash."""
        # In reality, this would be a complex calculation based on recent blocks.
        return f"entropy_hash_{random.choice(['A', 'B'])}"  # 'B' simulates a divergent state

    async def perform_entropy_consensus_check(self):
        """Initiates and manages the asynchronous entropy consensus check."""
        print(f"\nNode {self.node_id} starting entropy check with hash: {self.my_entropy_hash}")

        # Create tasks for querying peers
        tasks = [
            asyncio.create_task(peer.handle_entropy_request(self.my_entropy_hash))
            for peer in self.peers
        ]

        try:
            # Wait for responses with a timeout
            self.entropy_responses = await asyncio.wait_for(asyncio.gather(*tasks), timeout=0.3)
        except asyncio.TimeoutError:
            print("Consensus check timed out. Proceeding with collected responses.")
            # Gather results from completed tasks even if timeout occurred
            self.entropy_responses = [task.result() for task in tasks if task.done() and not task.cancelled()]

        # Analyze results
        matches = sum(1 for resp in self.entropy_responses if resp["match"])
        total_responses = len(self.entropy_responses)

        if total_responses == 0:
            print("CRITICAL: No responses from peers. Assuming network isolation.")
            return

        agreement_ratio = matches / total_responses
        print(f"Consensus result: {matches}/{total_responses} peers agree. ({agreement_ratio:.2%})")

        if agreement_ratio < 0.66:  # 2/3 supermajority
            print("CRITICAL: Entropy mismatch detected! Initiating emergency resync.")
            # ... trigger resync logic here ...
        else:
            print("Consensus successful. Proceeding with block production.")


# --- Simulation Setup ---
async def main():
    # A network of peers. Peer 4 has a divergent state.
    peers = [
        Peer(node_id=1, entropy_hash="entropy_hash_A"),
        Peer(node_id=2, entropy_hash="entropy_hash_A"),
        Peer(node_id=3, entropy_hash="entropy_hash_A"),
        Peer(node_id=4, entropy_hash="entropy_hash_B"),  # Divergent peer
    ]

    # Our node has the majority state
    our_node_correct = Node(node_id=0, peers=peers)
    our_node_correct.my_entropy_hash = "entropy_hash_A"
    await our_node_correct.perform_entropy_consensus_check()

    # Our node has the minority (incorrect) state
    our_node_divergent = Node(node_id=5, peers=peers)
    our_node_divergent.my_entropy_hash = "entropy_hash_B"
    await our_node_divergent.perform_entropy_consensus_check()


if __name__ == "__main__":
    asyncio.run(main())
```

Section 3: Efficient and Safe Network Synchronization

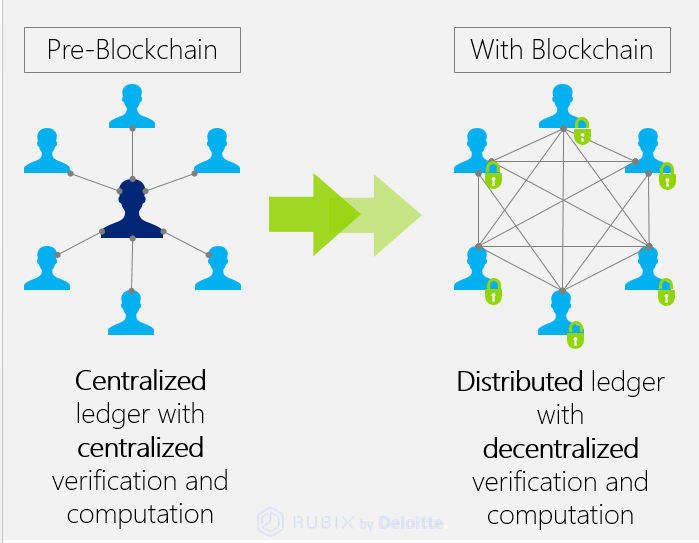

When a new node joins the network or an existing one falls behind, it must "sync" by downloading the missing data. A naive approach of downloading blocks one by one is slow and inefficient, especially for large datasets. This impacts Network Performance and creates a poor user experience. A common optimization is to download data chunks in parallel from multiple peers.

The Peril of Unverified Parallel Downloads


Parallel downloads significantly increase speed by maximizing Bandwidth utilization. However, this introduces a new risk: data integrity. If a node downloads blocks 1-100 from Peer A, 101-200 from Peer B, and 201-300 from Peer C, it has no guarantee that these segments form a contiguous, valid chain. A malicious or faulty peer could serve a fraudulent segment, corrupting the node's entire state. Without a final verification step, this optimization can lead to massive data loss or security vulnerabilities.

Implementing a Secure Syncing Mechanism

A robust synchronization process combines the speed of parallel downloads with the security of sequential verification.

- Range Discovery: The syncing node asks its peers for the latest block number to determine the range of data it needs to download.

- Parallel Download: It divides the missing range into chunks and assigns them to different peers, downloading them simultaneously into a temporary buffer.

- Sequential Integrity Verification: After the downloads are complete, the node performs a fast, sequential check on the buffered data. It starts from the first missing block and verifies that each subsequent block's `previous_hash` correctly points to the hash of the block before it.

- Commit or Retry: If the entire range is verified, the data is committed to permanent storage. If a break is found, the node discards the fraudulent data and retries the download for the invalid range, potentially blacklisting the faulty peer.

This hybrid approach is essential for any large-scale distributed system and is a key component of modern DevOps Networking strategies for rapid node deployment and recovery.

```javascript
// Conceptual JavaScript example for sync verification logic
class SyncManager {
  constructor(storage) {
    this.storage = storage; // Interface to persistent storage
  }

  // Simulates downloading a range of blocks into a temporary buffer
  async parallelDownload(startBlock, endBlock, peers) {
    console.log(`Starting parallel download for blocks ${startBlock}-${endBlock}...`);
    // In a real implementation, this would involve complex networking code.
    // For this example, we'll return a pre-made (but potentially broken) list.
    const downloadedBlocks = getSimulatedDownloadedBlocks(startBlock, endBlock);
    return downloadedBlocks;
  }

  async verifyAndCommit(downloadedBlocks) {
    if (downloadedBlocks.length === 0) {
      console.log("No blocks to verify.");
      return true;
    }

    console.log("Starting chain integrity verification...");
    let lastVerifiedBlock = await this.storage.getBlock(downloadedBlocks[0].index - 1);

    if (!lastVerifiedBlock) {
      console.error("Verification FAILED: Cannot find anchor block to start verification.");
      return false;
    }

    for (const block of downloadedBlocks) {
      if (block.previous_hash !== lastVerifiedBlock.hash) {
        console.error(`Verification FAILED: Chain break at block ${block.index}. Expected prev_hash ${lastVerifiedBlock.hash}, got ${block.previous_hash}.`);
        // Initiate retry logic for this and subsequent blocks
        return false;
      }
      // You would also verify the block's own hash and other properties here
      lastVerifiedBlock = block;
    }

    console.log("Chain integrity verification successful. Committing blocks to storage.");
    await this.storage.commitBlocks(downloadedBlocks);
    return true;
  }

  async startSync() {
    // ... logic to determine missing range (e.g., 101 to 500) ...
    const missingStart = 101;
    const missingEnd = 500;
    const peers = ['peer1', 'peer2', 'peer3'];

    const downloadedData = await this.parallelDownload(missingStart, missingEnd, peers);
    const success = await this.verifyAndCommit(downloadedData);

    if (success) {
      console.log("Sync completed successfully!");
    } else {
      console.log("Sync failed. Retrying with a different strategy.");
    }
  }
}

// Helper functions for simulation (not shown)
// getSimulatedDownloadedBlocks(), storage.getBlock(), storage.commitBlocks()
```
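The Parallel Download step from the list above leaves open how the missing range is divided. The following Python sketch shows one simple possibility: fixed-size chunks assigned to peers round-robin. The function names, the chunk size of 100, and the round-robin policy are all assumptions made for illustration. A real node might instead weight peers by latency or past reliability.

```python
def split_range(start, end, chunk_size):
    """Split the inclusive block range [start, end] into inclusive chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks = []
    lo = start
    while lo <= end:
        hi = min(lo + chunk_size - 1, end)
        chunks.append((lo, hi))
        lo = hi + 1
    return chunks


def assign_chunks(chunks, peers):
    """Assign chunks to peers round-robin; returns (peer, (lo, hi)) pairs."""
    if not peers:
        raise ValueError("at least one peer is required")
    return [(peers[i % len(peers)], chunk) for i, chunk in enumerate(chunks)]


chunks = split_range(101, 500, chunk_size=100)
plan = assign_chunks(chunks, ["peer1", "peer2", "peer3"])
for peer, (lo, hi) in plan:
    print(f"{peer}: blocks {lo}-{hi}")
```

Because the chunks are contiguous and non-overlapping, concatenating the downloaded segments in chunk order yields exactly the list that the sequential verification step expects. When verification fails inside a chunk, the node knows which peer supplied that chunk and can retry it elsewhere.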

Section 4: Production Readiness - APIs, Diagnostics, and Best Practices

A network protocol that works in a lab is not the same as a production-ready system. Production systems must be observable, manageable, and resilient. This requires a strong focus on diagnostics, monitoring, and well-defined Network APIs.


Exposing Network State via APIs

To integrate with other systems (monitoring dashboards, wallets, explorers), the node must expose its internal state through a secure API, such as a REST API or GraphQL. Key endpoints include:

- `/status`: Provides a summary of the node's health, including uptime, block height, and sync status.

- `/metrics`: Exposes performance counters for tools like Prometheus (e.g., connected peers, transaction pool size).

- `/block/{id}`: Allows external tools to query specific data from the chain.

A crucial flag to expose is `NODE_IS_SYNCHRONIZED`. Other modules within the software, or external clients, need to know if the node's data is complete and up-to-date before trusting it. For instance, a wallet service should not show a user's balance until the node is fully synchronized.
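As a rough illustration, the payload behind a `/status` endpoint and the client-side check on `NODE_IS_SYNCHRONIZED` might look like the sketch below. Apart from the flag name, every field name and both function names are assumptions, and the "synchronized" rule used here (local height has reached the best known network height) is a simplification of what a real node would apply.

```python
import json
import time


def build_status(started_at, local_height, network_height, now=None):
    """Build a /status payload (field names are illustrative assumptions)."""
    now = time.time() if now is None else now
    return {
        "uptime_seconds": int(now - started_at),
        "block_height": local_height,
        "network_height": network_height,
        # The flag clients should check before trusting chain data.
        "NODE_IS_SYNCHRONIZED": local_height >= network_height,
    }


def can_show_balance(status):
    """A wallet-style client gate: only trust a fully synchronized node."""
    return bool(status.get("NODE_IS_SYNCHRONIZED", False))


syncing = build_status(started_at=1000.0, local_height=450, network_height=500, now=1060.0)
synced = build_status(started_at=1000.0, local_height=500, network_height=500, now=1060.0)

print(json.dumps(syncing))
print(can_show_balance(syncing), can_show_balance(synced))
```

Treating a missing flag as "not synchronized" keeps the client on the safe side if it talks to an older node that does not report the field.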

Best Practices for Robust Network Development

- Predefined Genesis State: Avoid dynamically generating critical initial parameters like genesis wallets or hashes. A production network should start from a hardcoded, deterministic, and publicly known genesis state to ensure all nodes bootstrap from the same foundation.

- Detailed, Structured Logging: Don't just log "Sync failed." Log *why* it failed: "Chain integrity check failed at block 1,337: expected previous_hash '0xabc', found '0xdef'." This makes Network Troubleshooting much faster. Use structured logging (e.g., JSON format) for easier parsing by log analysis tools.

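- Secure Entropy: When randomness is required for leader election or other protocol functions, derive it deterministically with a cryptographic function (for example, a hash or a CSPRNG) from a seed the whole network agrees on, such as a hash of a recent block. A node-local random source would give every node a different value and reintroduce the entropy mismatch described in Section 2.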

- Configuration Management: Externalize key parameters like network ports, peer lists, and start times using environment variables (e.g., `QNET_NODE_START_TIME`) or configuration files. This is a core tenet of System Administration and modern application deployment.

- Network Security: Always consider security. Implement firewalls, encrypt communication channels with TLS (HTTPS for web-facing APIs), and protect your API endpoints from abuse, for example with authentication and rate limiting. A VPN can be used for secure administrative access.
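Two of the practices above, structured logging and externalized configuration, can be sketched with the Python standard library alone. Treat this as one possible shape rather than a prescribed setup: `JsonFormatter`, `load_config`, the `fields` key passed through `extra`, and the `QNET_NODE_PORT` variable with its default are hypothetical. Only `QNET_NODE_START_TIME` is named in the text.

```python
import json
import logging
import os


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Structured fields arrive via the `extra` argument of the log call.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload, sort_keys=True)


def load_config(env=os.environ):
    """Read settings from the environment, with illustrative defaults."""
    return {
        "start_time": int(env.get("QNET_NODE_START_TIME", "0")),
        "port": int(env.get("QNET_NODE_PORT", "9000")),  # hypothetical variable
    }


logger = logging.getLogger("node.sync")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

config = load_config({"QNET_NODE_START_TIME": "1700000000"})
logger.error(
    "chain_integrity_check_failed",
    extra={"fields": {
        "block": 1337,
        "expected_previous_hash": "0xabc",
        "found_previous_hash": "0xdef",
    }},
)
```

Passing a plain dict to `load_config` instead of relying on `os.environ` keeps the function easy to test, and a stable event name such as `chain_integrity_check_failed` gives log analysis tools a key to filter on.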

Conclusion: Engineering for Resilience

Building a robust, decentralized network is a formidable challenge that goes far beyond basic Socket Programming. It requires a multi-layered strategy that prioritizes data integrity, state consensus, and operational observability. By implementing strict validation rules to prevent broken chains, designing asynchronous consensus checks to avoid deadlocks, and combining parallel downloads with rigorous verification, we can build systems that are both fast and secure.

Furthermore, a production-ready network is one that is transparent and manageable. Through detailed diagnostics and well-designed Network APIs, operators can monitor health, troubleshoot issues, and build a thriving ecosystem of tools and services. The principles discussed here are not limited to one technology; they are fundamental to creating the resilient, decentralized systems that will power the future of technology, whether you're working on Software-Defined Networking (SDN), IoT, or the next generation of the web. The journey from a simple operational network to a truly resilient one is paved with meticulous engineering and a deep understanding of what can, and will, go wrong.